
Why Is Ransomware a Critical Threat in Modern Cybersecurity?

Ransomware attacks have caused major disruptions for numerous organizations for more than a decade. They have led to the loss of critical and sensitive data, along with other damaging consequences such as huge sums being paid to criminal organizations. Nearly every major industry is regularly affected by ransomware in some way. Ransomware attacks reached 5,263 incidents in 2024, the highest number recorded since tracking began in 2021, and the figure appears to keep growing year over year.

While preventive measures remain a key part of ransomware defense, maintaining regular data backups is the most effective means of recovering data after an attack. Protecting those backups is paramount: many forms of ransomware also target backed-up data, so safeguarding backups from ransomware is an absolute must. Recent research also shows that attackers attempted to compromise the backups of 94% of organizations hit by ransomware, making backup protection more critical than ever.

Ransomware is a type of malware that infiltrates a victim’s computer or servers, encrypts their data, and renders it inaccessible. The perpetrators then demand a ransom payment, typically in cryptocurrency, in exchange for the decryption key. Ransomware attacks tend to have devastating consequences: significant financial losses, disrupted productivity, and even bankruptcy. The 2024 attack on Change Healthcare (a major U.S. healthcare technology and payment-processing company) alone resulted in $3.09 billion in damages and compromised the protected health information of over 100 million individuals.

Once the targeted files are encrypted, the attackers demand payment, usually in bitcoin or other cryptocurrencies that are difficult for law enforcement to trace. If the victim refuses to comply, the attackers may escalate their threats, for example by publishing the stolen files online or permanently deleting them.

Who Are the Primary Targets of Ransomware Attacks?

While practically any device is a potential ransomware target, ransomware creators tend to focus on certain categories of information and user groups:

- Government bodies – primary targets for most ransomware variations due to the sheer amount of sensitive data they hold; it is also a common assumption that the government would rather pay the ransom than let the sensitive data of political significance be released to the public or sold to third parties.

- Healthcare organizations – a notable target for ransomware because many healthcare infrastructures rely on outdated software and hardware, which drastically reduces the effort needed to break through their protective measures; healthcare data is also extremely valuable because of its direct connection to the health and lives of hundreds or thousands of patients.

- Mobile devices – a common target due to the nature of modern smartphones and the amount of data a single smartphone tends to hold (be it personal videos and photos, financial information, etc.).

- Academic institutions – one of the biggest targets for ransomware, due to the combination of working with large volumes of sensitive data and having smaller IT groups, budget constraints, and other factors that contribute to the overall lower effectiveness of their security systems.

- Human resources departments – may not hold much valuable information themselves, but they make up for it with access to both the financial and personal records of other employees; HR is also a common target due to the nature of the work itself (opening hundreds of emails per day makes it far more likely that an HR employee will open an email containing malware).

The number of ransomware attacks seems to be growing at an alarming pace. In 2024, 65% of financial services organizations were targeted by ransomware, and attackers attempted to compromise backups in 90% of these attacks. New ransomware types and variants are also developed regularly. An entirely new business model, Ransomware-as-a-Service (RaaS), now offers subscription access to the latest malware for a monthly fee, greatly simplifying the overall ransomware attack process.

The latest information from Statista confirms the statements above regarding the biggest ransomware targets overall: government organizations, healthcare, finance, manufacturing, and so on. Financial organizations also appear to be steadily rising among the biggest ransomware targets.

There are ways to protect your company against various ransomware attacks, and the first, critical one is to make sure you have ransomware-proof backups.

What Are the Different Types of Ransomware?

Let’s first look at the different types of threats. Encrypting ransomware (cryptoware) is one of the most widespread types of ransomware today. Notable but less widespread types include:

- Lock screens (interruption with the ransom demand, but with no encryption),

- Mobile device ransomware (cell-phone infection),

- MBR encryption ransomware (encrypts or overwrites the Master Boot Record, the section of the disk used to boot the computer, preventing the user from accessing the OS in the first place),

- Extortionware/leakware (targets sensitive and compromising data, then demands ransom in exchange for not publishing the targeted data), and so on.

Ransomware attacks have continued to grow in both frequency and sophistication since 2022. Recent data shows that the average ransom payment reached $2.73 million in 2024, a dramatic increase from $400,000 in 2023. This steep escalation reflects both the growing sophistication of attacks and attackers’ improved understanding of victim organizations’ financial capabilities.

While preventive measures are the preferred way to deal with ransomware, they are typically not 100% effective. For attacks that do penetrate an organization, backup is the last line of defense. Data backup and recovery have proven to be an effective and critical protection against ransomware. However, recovering data effectively means maintaining a strict backup schedule and taking measures to prevent your backups from also being captured and encrypted by ransomware.

For an enterprise to sufficiently protect backups from ransomware, advance preparation and thought are required. Data protection technology, backup best practices, and staff training are critical for mitigating the business-threatening disruption that ransomware attacks inflict on an organization’s backup servers.

Double-extortion and data exfiltration

Double-extortion represents the most significant evolution in ransomware tactics over the past five years, fundamentally changing how organizations must approach data protection. Unlike traditional encryption-only attacks where backups provide complete recovery, double-extortion creates liability that persists even after successful restoration.

Attackers now routinely exfiltrate sensitive data during reconnaissance phases – often weeks before deploying encryption payloads. This stolen data becomes permanent leverage. Organizations with perfect backups still face threats of public data dumps, regulatory penalties, competitive intelligence loss, and reputational damage.

Recent high-profile attacks demonstrate this threat: attackers published stolen patient records, customer databases, and proprietary research even after victims refused to pay, causing regulatory fines exceeding tens of millions of dollars. The threat model has fundamentally shifted – ransomware is no longer just an availability attack solved by backups, but a confidentiality breach requiring comprehensive data protection.

Target priorities include:

- customer personally identifiable information (PII)

- financial records

- intellectual property

- employee data

- healthcare records

- any information subject to regulatory protection

Attackers assess data value before selecting victims, specifically targeting organizations holding sensitive information with publication consequences.

Defense requires layering backup strategies with data exfiltration prevention. Data loss prevention (DLP) systems monitor and block unusual data transfers. Network segmentation limits lateral movement between data repositories. Encryption at rest renders stolen files unusable without corresponding keys. Zero-trust architectures verify all access attempts regardless of source.

Backups remain essential for operational recovery but address only half the double-extortion threat. Comprehensive ransomware defense acknowledges that modern attacks create dual risks requiring dual protections – backup resilience for encryption and access controls for exfiltration.

What Are Common Myths About Ransomware and Backups?

If you are researching how to protect backups from ransomware, you might come across wrong or out-of-date advice. The reality is that ransomware backup protection is more complex than it first appears, so let’s look at some of the most popular myths surrounding the topic.

Ransomware Backup Myth 1: Ransomware doesn’t infect backups. You might think your backed-up files are safe. However, not all ransomware activates as soon as a system is infected; some strains lie dormant before they start encrypting. This means your backups may contain a copy of the ransomware.

Ransomware Backup Myth 2: Encrypted backups are protected from ransomware. Encryption keeps backup data confidential, but it does nothing to remove malware that was already present when the backup was taken. As soon as you restore such a backup, the dormant infection is restored with it and can activate again.

Ransomware Backup Myth 3: Only Windows is affected. Many people think they can eliminate the threat by running their backups on a different operating system. Unfortunately, ransomware variants also exist for Linux, macOS, and hypervisors such as VMware ESXi, and if infected files are synced to shared or cloud storage, the ransomware can cross over to other platforms.

Ransomware Backup Myth 4: Paying the ransom money is easier and cheaper than investing in data recovery systems. There are two strong arguments against this. Number one – companies that pay the ransom are perceived as vulnerable and unwilling to fight against ransomware attacks. Number two – paying the ransom money in full is not even close to being a guaranteed way to acquire decryption keys.

Ransomware Backup Myth 5: Ransomware attacks are mostly done for the sake of revenge against large enterprises that mistreat regular people. There is a connection that could be made between companies with questionable customer policies and revenge attacks, but the vast majority of attacks are simply looking for anyone to take advantage of.

Ransomware Backup Myth 6: Ransomware does not attack smaller companies and only targets large corporations. Bigger companies might be more attractive targets because of the larger ransom they could pay, but smaller companies are also attacked by ransomware regularly, and even private users face a significant number of ransomware attacks. A report from Sophos shows that this myth simply isn’t true: a large share of both large and small companies are affected by ransomware within a year (72% of enterprises with $5 billion in revenue and 58% of businesses with less than $10 million in revenue).

Of course, there are still many ways to protect backups from ransomware. Below are some important strategies to consider for your business.

What Are the Best Methods of Protecting Backups from Ransomware?

Here are some specific technical considerations for your enterprise IT environment, to protect your backup server against future ransomware attacks:

Use Unique, Distinct Credentials

Backup systems require dedicated authentication that exists nowhere else in your infrastructure. Ransomware attacks frequently begin by compromising standard administrative credentials, then using those same credentials to locate and destroy backup repositories. When backup storage shares authentication with production systems, a single credential breach exposes everything.

Access control requirements:

- Enable multi-factor authentication for any human access to backup infrastructure – hardware tokens or authenticator applications provide stronger protection than SMS-based codes

- Implement role-based access control separating backup operators (who execute jobs and monitor completion) from backup administrators (who configure policies and storage)

- Grant minimum required permissions for each function – file read access for backup agents, write access to specific storage locations, nothing more

- Avoid root or Administrator privileges for backup operations, which give ransomware unnecessary system access
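The role separation above can be sketched as a deny-by-default permission check. This is a minimal Python illustration, not Bacula’s access-control model. The `Permission` values, role names, and `is_allowed` function are hypothetical. No role is granted `DELETE_BACKUP`, on the assumption that deletions go through a separate, audited process.

```python
from enum import Enum, auto


class Permission(Enum):
    # Hypothetical permission set for a backup platform (illustrative only).
    RUN_JOB = auto()
    VIEW_STATUS = auto()
    EDIT_POLICY = auto()
    CONFIGURE_STORAGE = auto()
    DELETE_BACKUP = auto()


# Operators run and monitor jobs; administrators configure policies and storage.
# No single role holds every permission, so one stolen account cannot do everything.
ROLE_PERMISSIONS: dict[str, frozenset[Permission]] = {
    "backup_operator": frozenset({Permission.RUN_JOB, Permission.VIEW_STATUS}),
    "backup_admin": frozenset(
        {Permission.VIEW_STATUS, Permission.EDIT_POLICY, Permission.CONFIGURE_STORAGE}
    ),
}


def is_allowed(role: str, permission: Permission, mfa_verified: bool) -> bool:
    """Deny by default: sessions without MFA and unknown roles get nothing."""
    if not mfa_verified:
        return False
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```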

Create service accounts exclusively for backup operations with no other system access. These accounts should never log into workstations, email systems, or other applications. Bacula’s architecture enforces this separation by default, running its daemons under dedicated service accounts that operate independently from production workloads.

Monitor authentication logs for backup system access, particularly failed login attempts, access from unusual locations, or credential use outside normal backup windows. Audit privileged actions like retention policy changes or backup deletions – legitimate changes happen infrequently, making unauthorized activity obvious.
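The following is a minimal sketch of the log review described above. It assumes authentication events have already been parsed into `AuthEvent` records (a hypothetical structure) and that the nightly backup window runs from 22:00 to 04:00. It flags sources with repeated failed logins and any successful login outside the backup window.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class AuthEvent:
    # Hypothetical, already-parsed authentication log record.
    timestamp: datetime
    account: str
    source_ip: str
    success: bool


def in_window(ts: datetime, start: time, end: time) -> bool:
    """True if ts falls inside a daily window; handles windows that cross midnight."""
    t = ts.time()
    if start <= end:
        return start <= t <= end
    return t >= start or t <= end


def audit_auth_events(
    events: list[AuthEvent],
    window_start: time = time(22, 0),
    window_end: time = time(4, 0),
    max_failures: int = 3,
) -> list[str]:
    """Return alerts for repeated failed logins and for logins outside the backup window."""
    alerts = []
    failures = Counter(e.source_ip for e in events if not e.success)
    for ip, count in sorted(failures.items()):
        if count >= max_failures:
            alerts.append(f"{count} failed logins from {ip}")
    for e in events:
        if e.success and not in_window(e.timestamp, window_start, window_end):
            alerts.append(
                f"{e.account} logged in outside the backup window at {e.timestamp:%H:%M}"
            )
    return alerts
```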

Offline storage

Offline storage is one of the best defenses against the propagation of ransomware encryption to the backup storage. There are a number of storage possibilities that are worth mentioning:

| Media Type | What’s Important |
| --- | --- |
| Cloud target backups | These use a different authentication mechanism and are only partially connected to the backup system. Using cloud target backups is a good way to protect backups from ransomware because your data is kept safe in the cloud. In the case of an attack, you can restore your system from it, although that may prove expensive. Keep in mind that syncing with local data storage uploads the infection to your cloud backup too. |
| Primary storage snapshots | Snapshots have a different authentication framework and are used for recovery. Snapshot copies are read-only, so new ransomware attacks can’t infect them. If you identify a threat, you simply restore from a snapshot taken before the attack took place. |
| Replicated VMs | Best when controlled by a different authentication framework (for example, separate domains for vSphere and Hyper-V hosts) and kept powered off. Keep careful track of your retention schedule: if a ransomware attack happens and you don’t notice it before your backups are encrypted, you might not have any backups to restore from. |
| Hard drives/SSD | Keep them detached, unmounted, or offline unless they are being read from or written to. Some solid-state drives have been compromised by malware, but such attacks go beyond the reach of typical ransomware. |
| Tape | You can’t get more offline than tapes that have been unloaded from a tape library. Tapes are also convenient for off-site storage, and because the data is usually kept off-site, tape backups are normally safe from ransomware attacks and natural disasters. Tapes should always be encrypted. |
| Appliances | Appliances, being black boxes, need to be properly secured against unauthorized access to protect against ransomware attacks. Stricter network security than for regular file servers is advisable, as appliances may have more unexpected vulnerabilities than regular operating systems. |

Backup Copy Jobs

A Backup Copy Job copies existing backup data to another disk system so that it can be restored later or sent to an offsite location.

Running a Backup Copy Job is an excellent way to create restore points that follow retention rules different from the regular backup job and reside on separate storage. The Backup Copy Job helps protect backups from ransomware because it maintains its own independent set of restore points: if the primary backups are compromised, the copies remain available.

For example, if you add an extra storage device to your infrastructure (for instance a Linux server) you would be able to define a repository for it and create a Backup Copy Job to work as your ransomware backup.
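To illustrate the idea behind a copy job, here is a minimal sketch that copies backup files from a primary repository to a second storage location and verifies each copy with a SHA-256 checksum. The directory layout and the `copy_backups` function are assumptions for illustration. A real Backup Copy Job in a backup product also tracks catalog entries and applies its own retention rules.

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file so large backup volumes don't need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def copy_backups(primary: Path, secondary: Path) -> list[str]:
    """Copy backup files not yet present on secondary storage and verify each copy.

    Returns the relative paths copied in this run.
    """
    copied = []
    for src in sorted(p for p in primary.rglob("*") if p.is_file()):
        rel = src.relative_to(primary)
        dst = secondary / rel
        src_hash = sha256_of(src)
        if dst.exists() and sha256_of(dst) == src_hash:
            continue  # already copied in an earlier run
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)
        if sha256_of(dst) != src_hash:
            raise OSError(f"checksum mismatch after copying {rel}")
        copied.append(rel.as_posix())
    return copied
```

Running it again only copies volumes that are new or differ on the secondary side, so it could be scheduled after each backup window.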

Avoid too many file system types

Although involving different protocols is a good way to prevent ransomware propagation, be aware that this is certainly no guarantee against ransomware backup attacks. Different types of ransomware tend to evolve and get more effective on a regular basis, and new types appear quite frequently.

Therefore, it is advisable to take an enterprise-grade approach to security: backup storage should be as inaccessible as possible, and only one service account, on known machines, should need to access it. File system locations used to store backup data should be accessible only to the relevant service accounts, to protect that data from ransomware attacks.
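The sketch below shows one way to check that requirement on POSIX systems. It verifies that a backup directory is owned by the backup service account and grants no group or world permissions. The function name is hypothetical, and the check covers only standard mode bits, not ACLs or the contents of subdirectories.

```python
import os
import stat
from pathlib import Path


def check_backup_dir_permissions(path: Path, service_uid: int) -> list[str]:
    """Report settings that would let accounts other than the backup
    service account reach backup data (POSIX mode bits only)."""
    problems = []
    st = os.stat(path)
    if st.st_uid != service_uid:
        problems.append(f"{path} is owned by uid {st.st_uid}, not {service_uid}")
    mode = stat.S_IMODE(st.st_mode)
    # Any read, write, or execute bit for group or others is a finding.
    if mode & (stat.S_IRWXG | stat.S_IRWXO):
        problems.append(f"{path} grants group/other access (mode {mode:o})")
    return problems
```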

Use the 3-2-1-1 rule

Following the 3-2-1 rule means having three distinct copies of your data, on two different media, one of which is off-site. The strength of this approach for ransomware backup is that it addresses practically any failure scenario and does not require any specific technology. In the era of ransomware, Bacula recommends adding a second “1” to the rule: one of the media should be offline. There are a number of options for making an offline or semi-offline copy of your data. In practice, whenever you back up to non-file-system targets, you are already close to achieving this rule, which is why tapes and cloud object storage targets are helpful. Putting tapes in a vault after they are written is a long-standing best practice.

Cloud storage targets act as semi-offline storage from a backup perspective. The data is not on-site, and access to it requires custom protocols and secondary authentication. Some cloud providers allow objects to be set in an immutable state, which would satisfy the requirement to prevent them from being damaged by an attacker. As with any cloud implementation, a certain amount of reliability and security risk is accepted by trusting the cloud provider with critical data, but as a secondary backup source the cloud is very compelling.
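The four clauses of the 3-2-1-1 rule can be expressed as a simple check over an inventory of copies. This sketch assumes the production data counts as one of the three copies, which is the usual reading of the rule, and that "offline" means air-gapped or immutable. The `BackupCopy` structure and the media labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackupCopy:
    name: str
    media: str     # e.g. "disk", "tape", "object-storage"
    offsite: bool
    offline: bool  # air-gapped or immutable: unreachable with normal credentials


def check_3_2_1_1(copies: list[BackupCopy]) -> dict[str, bool]:
    """Evaluate each clause of the 3-2-1-1 rule; include the production copy in `copies`."""
    return {
        "3 copies": len(copies) >= 3,
        "2 media types": len({c.media for c in copies}) >= 2,
        "1 off-site": any(c.offsite for c in copies),
        "1 offline": any(c.offline for c in copies),
    }
```

For example, production data on disk plus a local disk backup and a cloud copy satisfies 3-2-1 but not the extra "1". Adding a vaulted tape makes every clause pass.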

Verify backup integrity (the 3-2-1-1-0 rule)

The modern 3-2-1-1-0 rule extends traditional backup practices with a critical fifth component: zero errors. This principle emphasizes that backups are only valuable if their successful restoration is guaranteed when needed. The “0” represents verified, error-free backups that have been tested and proven restorable.

Many organizations discover too late that their backups are corrupted, incomplete, or impossible to restore. Ransomware sometimes lies dormant in backup copies for weeks or months before activation, making regular verification essential. Without systematic testing, you may have multiple copies of unusable data rather than functional backups.

Implementing the zero-errors principle requires several practices:

- Automated integrity checks should run after each backup job, verifying checksums and file integrity. These checks catch corruption immediately rather than during a crisis recovery situation.

- Regular restoration testing means periodically restoring data from all backup sources to confirm the process works end-to-end. Test restores should cover different scenarios: individual files, entire systems, and full disaster recovery situations.

- Bandwidth and performance verification ensures your infrastructure will be able to handle full-capacity restores within your recovery time objectives. A backup that takes three weeks to restore may be technically intact but operationally useless.

- Documentation of recovery procedures should be maintained and updated with each test, ensuring staff would still be able to successfully execute recoveries under pressure.
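The automated integrity check in the first bullet can be sketched as a checksum manifest. A SHA-256 hash is recorded for every backup file when the job finishes, then recomputed and compared later. This is a minimal illustration with hypothetical function names. It assumes the manifest is stored outside the backup directory, ideally on separate, protected storage, and it complements rather than replaces actual test restores.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path: Path) -> str:
    h = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def write_manifest(backup_dir: Path, manifest: Path) -> None:
    """Record a checksum for every file right after the backup job finishes."""
    entries = {
        p.relative_to(backup_dir).as_posix(): file_sha256(p)
        for p in sorted(backup_dir.rglob("*"))
        if p.is_file()
    }
    manifest.write_text(json.dumps(entries, indent=2))


def verify_manifest(backup_dir: Path, manifest: Path) -> list[str]:
    """Return a list of errors; an empty list is the "0" in 3-2-1-1-0."""
    errors = []
    for rel, expected in json.loads(manifest.read_text()).items():
        path = backup_dir / rel
        if not path.is_file():
            errors.append(f"missing: {rel}")
        elif file_sha256(path) != expected:
            errors.append(f"corrupted: {rel}")
    return errors
```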

Schedule comprehensive recovery drills quarterly at minimum, testing backups from different time periods and storage locations. Measure and document your actual recovery time objectives (RTO) and recovery point objectives (RPO) during these drills, comparing them against your business requirements. This practice transforms theoretical backup protection into proven, reliable data recovery capability.

Avoid storage snapshots

Storage snapshots are useful for recovering deleted files to a point in time, but they aren’t backups in the true sense. Storage snapshots tend to lack advanced retention management and reporting. All the data also remains on the same system, so it may be vulnerable to any attack that affects the primary data. A snapshot is no more than a point-in-time copy of your data; as such, it will also capture ransomware that has been programmed to lie dormant until a certain moment.

Bare metal recovery

Bare metal recovery can be accomplished in many different ways. Many enterprises simply deploy a standard image, provision software, and then restore data and/or user preferences. In many cases, all data is already stored remotely and the system itself is largely unimportant. In others, however, this is not a practical approach, and the ability to completely restore a machine to a point in time is a critical function of the disaster recovery implementation that lets you recover from ransomware.

The ability to restore a ransomware-encrypted computer to a recent point in time, including any user data stored locally, may be a necessary part of a layered defense. The same approach can be applied to virtualized systems, although better options are usually available at the hypervisor level.

Backup plan testing

Testing backup and recovery procedures transforms theoretical protection into proven capability. Organizations that discover backup failures during actual ransomware incidents face catastrophic data loss and extended downtime. Regular testing identifies problems before emergencies occur.

Establish a structured testing schedule based on data criticality. Test mission-critical systems monthly, important systems quarterly, and standard systems semi-annually. Each test validates different recovery scenarios to ensure comprehensive coverage.

Recovery scenarios to test regularly:

- File-level restoration – Recover individual files and folders from various dates to verify granular recovery capabilities

- System-level restoration – Restore complete servers, databases, or virtual machines to confirm full system recovery

- Bare metal recovery – Rebuild systems from scratch on new hardware to validate disaster recovery procedures

- Cross-platform recovery – Test restoration to different hardware or virtualized environments

- Partial recovery – Restore specific application components or database tables to verify selective recovery options

Document Recovery Time Objective (RTO) and Recovery Point Objective (RPO) for each system during testing. RTO measures how quickly you restore operations – the time between failure and full recovery. RPO measures potential data loss – the time between the last backup and the failure event. Compare actual results against business requirements and adjust backup frequencies or infrastructure accordingly.
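The two definitions translate directly into arithmetic on drill timestamps. The sketch below is a minimal illustration; the function names and example timestamps are hypothetical.

```python
from datetime import datetime, timedelta


def measure_drill(
    last_backup: datetime, failure: datetime, restored: datetime
) -> tuple[timedelta, timedelta]:
    """Return the (RPO, RTO) actually achieved in one recovery drill."""
    rpo = failure - last_backup  # data written after the last good backup is lost
    rto = restored - failure     # time until operations are back to normal
    return rpo, rto


def meets_targets(
    rpo: timedelta, rto: timedelta, rpo_target: timedelta, rto_target: timedelta
) -> bool:
    return rpo <= rpo_target and rto <= rto_target
```

For example, suppose the last backup ran at 02:00, the simulated failure occurred at 09:30, and service was restored at 15:00. The drill then achieves an RPO of 7.5 hours and an RTO of 5.5 hours, which would miss a 4-hour RTO target.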

Verify that bandwidth capacity can handle full-scale restores within your RTO targets. A backup system that requires three weeks to restore terabytes of data fails operationally despite its technical integrity. Test restoration over production networks during business hours to identify realistic performance constraints.
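A quick estimate shows whether a full restore can fit within the RTO before any test is run. The sketch assumes decimal terabytes and a hypothetical `efficiency` factor for how much of the nominal link speed a restore actually achieves. Measured throughput from real tests should replace that guess.

```python
def restore_hours(data_tb: float, throughput_gbps: float, efficiency: float = 0.7) -> float:
    """Estimate restore time in hours.

    data_tb: data to restore, in decimal terabytes (10**12 bytes)
    throughput_gbps: nominal link speed in gigabits per second
    efficiency: assumed fraction of nominal bandwidth actually achieved
    """
    bits = data_tb * 1e12 * 8
    seconds = bits / (throughput_gbps * 1e9 * efficiency)
    return seconds / 3600
```

At full nominal speed, 50 TB over a 1 Gbps link takes about 111 hours (over four and a half days), while a 10 Gbps link brings it down to roughly 11 hours.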

Maintain detailed documentation of each test including procedures followed, time required, problems encountered, and corrective actions taken. Update runbooks based on test findings to ensure staff execute recoveries efficiently during actual incidents. Rotate testing responsibilities among team members to prevent single points of knowledge failure.

Monitoring, alerting and anomaly detection

Continuous monitoring detects ransomware attacks in progress before they destroy backup infrastructure. Attackers typically spend hours or days on reconnaissance before launching full-scale attacks: mapping backup systems, attempting credential access, and testing deletion capabilities. Monitoring needs to catch these reconnaissance activities early.

Critical events requiring immediate alerts:

- Failed authentication attempts to backup systems, especially multiple failures from single sources

- Backup deletion requests or retention policy modifications outside change windows

- Unusual backup sizes – dramatic increases suggest data exfiltration, significant decreases indicate corruption or tampering

- Backup job failures across multiple systems simultaneously, signaling coordinated attacks

- Access to backup storage from unauthorized IP addresses or geographic locations

- Encryption key access outside scheduled backup operations

Configure anomaly detection baselines by measuring normal backup patterns over 30-day periods. Establish typical backup sizes, completion times, and access patterns for each system. Integrate backup system logs with Security Information and Event Management (SIEM) platforms for unified visibility. Automate response to critical alerts where possible. Review monitoring data weekly even without alerts.
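A baseline like this can be as simple as the mean and standard deviation of recent backup sizes. This minimal sketch flags a job whose size deviates by more than three standard deviations from its history. The threshold and function name are assumptions. Production anomaly detection would also consider completion times and access patterns.

```python
from statistics import mean, stdev
from typing import Optional


def size_anomaly(
    history_gb: list[float], today_gb: float, z_threshold: float = 3.0
) -> Optional[str]:
    """Compare today's backup size with a baseline such as the last 30 days.

    Returns "increase", "decrease", or None. Per the alert list above, large
    increases can suggest exfiltration and large drops can suggest corruption
    or tampering.
    """
    if len(history_gb) < 2:
        return None  # not enough history to build a baseline
    mu, sigma = mean(history_gb), stdev(history_gb)
    if sigma == 0:
        # Perfectly flat history: treat any change as anomalous.
        if today_gb == mu:
            return None
        return "increase" if today_gb > mu else "decrease"
    z = (today_gb - mu) / sigma
    if z > z_threshold:
        return "increase"
    if z < -z_threshold:
        return "decrease"
    return None
```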

Immutable storage

Modern ransomware actively seeks and destroys backups before encrypting primary data. Immutable storage counters this threat by creating backup copies that cannot be modified, deleted, or encrypted once written – even by administrators with full system access.

WORM (Write-Once-Read-Many) technology represents the most robust implementation of immutability. When data is stored in WORM format, it becomes permanently locked for a specified retention period. Ransomware that compromises administrator credentials cannot override these protections, making WORM storage immune to credential-based attacks.

Cloud providers offer object-level immutability through services like Amazon S3 Object Lock, Azure Immutable Blob Storage, and Google Cloud Storage Retention Policies. These services lock objects at the API level, preventing deletion or modification requests from any user or application. Configuration requires enabling immutability at the bucket or container level before writing backup data.
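For Amazon S3 Object Lock, a default retention period can be applied with boto3’s `put_object_lock_configuration` call. The sketch below assumes a bucket that was created with Object Lock enabled. The bucket name, the 180-day period, and the helper function names are hypothetical. The client is passed in as a parameter so the configuration logic can be tested without AWS access.

```python
VALID_MODES = ("COMPLIANCE", "GOVERNANCE")


def object_lock_config(days: int, mode: str = "COMPLIANCE") -> dict:
    """Build an S3 Object Lock configuration with a default retention period."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {VALID_MODES}")
    if days <= 0:
        raise ValueError("retention must be a positive number of days")
    return {
        "ObjectLockEnabled": "Enabled",
        "Rule": {"DefaultRetention": {"Mode": mode, "Days": days}},
    }


def apply_default_retention(s3_client, bucket: str, days: int, mode: str = "COMPLIANCE") -> None:
    """Apply default retention to a bucket created with Object Lock enabled.

    s3_client is expected to be a boto3 S3 client, e.g. boto3.client("s3").
    New objects written without explicit retention inherit this default.
    """
    s3_client.put_object_lock_configuration(
        Bucket=bucket,
        ObjectLockConfiguration=object_lock_config(days, mode),
    )
```

In practice, it would be called as `apply_default_retention(boto3.client("s3"), "example-backup-vault", 180)`. COMPLIANCE mode is the stricter choice for ransomware protection because no user, including the account root user, can shorten or remove the retention. GOVERNANCE mode can be bypassed by users with special permissions.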

Hardware-based WORM solutions include specialized tape libraries and appliances with firmware-enforced write protection. These devices reject modification commands at the hardware level, providing protection independent of software vulnerabilities.

Implementation steps for immutable backups:

- Configure backup software to write to immutable targets immediately after backup completion

- Set retention periods that exceed your longest potential ransomware dormancy period – typically 90 to 180 days based on current threat patterns

- Separate authentication systems for immutable storage from production environments using dedicated service accounts with write-only permissions

- Layer immutable storage with standard backup infrastructure rather than replacing it – immutable copies serve as the final recovery option

- Monitor for unauthorized access attempts and failed deletion requests, which signal active attacks

- Verify that immutability settings remain enforced after system updates
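The last step can be automated with a periodic check that reads the bucket’s Object Lock configuration back and compares its default retention against your minimum. This sketch assumes a boto3-style S3 client and the response shape of `get_object_lock_configuration`. The function name and the 365-day approximation for `Years` are assumptions.

```python
def retention_is_enforced(s3_client, bucket: str, min_days: int) -> bool:
    """Re-check a bucket's Object Lock default retention, e.g. after system updates."""
    try:
        response = s3_client.get_object_lock_configuration(Bucket=bucket)
    except Exception:
        # boto3 raises botocore's ClientError here (no configuration, no access,
        # missing bucket); caught broadly so this sketch has no boto3 dependency.
        return False
    config = response.get("ObjectLockConfiguration", {})
    retention = config.get("Rule", {}).get("DefaultRetention", {})
    # Retention is given in either Days or Years; Years is approximated as 365 days.
    days = retention.get("Days") or 365 * retention.get("Years", 0)
    return config.get("ObjectLockEnabled") == "Enabled" and days >= min_days
```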

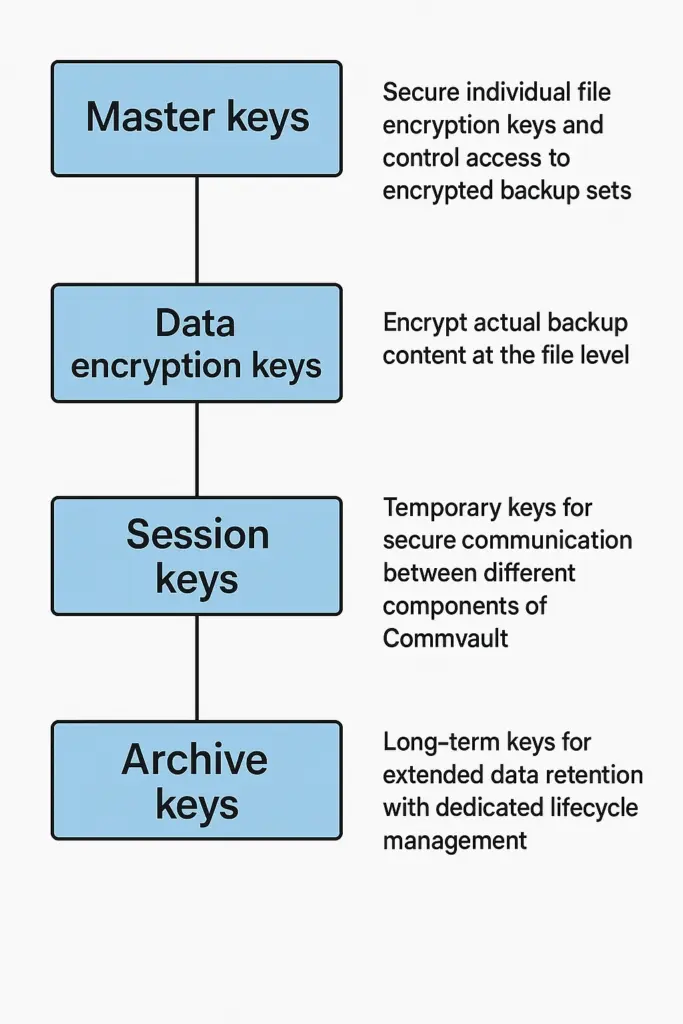

Backup encryption

Encryption protects backup data from unauthorized access and prevents attackers from exploiting stolen backups in double-extortion schemes. Modern ransomware groups increasingly exfiltrate data before encryption, threatening to publish sensitive information unless victims pay additional ransoms. Encrypted backups render stolen data unusable to attackers.

Implement encryption at two critical points:

- data at rest (stored backups)

- data in transit (during backup and restore operations)

AES-256 encryption provides industry-standard protection for stored backup data, offering effectively unbreakable security with current technology. TLS 1.2 or higher secures data moving between backup clients and storage targets, preventing interception during transmission.
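For data in transit, Python’s standard `ssl` module can enforce the TLS 1.2 floor directly. This is a minimal sketch of a client-side context for a backup storage endpoint. The function name and the optional private CA file are assumptions.

```python
import ssl
from typing import Optional


def backup_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context for connections to a backup storage endpoint."""
    # create_default_context() enables certificate and hostname verification.
    ctx = ssl.create_default_context(cafile=ca_file)
    # Refuse TLS 1.0/1.1 even if the local OpenSSL build would allow them.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

The context would then wrap a socket with `ctx.wrap_socket(sock, server_hostname=...)`, keeping certificate and hostname verification enabled.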

Key management practices:

- Store encryption keys separately from backup data – keys stored alongside encrypted backups provide no protection if attackers compromise the storage system

- Use dedicated key management systems (KMS) or hardware security modules (HSMs) that provide tamper-resistant key storage and access logging

- Implement role-based access controls limiting key access to authorized backup administrators only

- Rotate encryption keys annually or after any suspected security incident

- Maintain secure offline copies of encryption keys in physically separate locations – losing keys means permanent data loss regardless of backup integrity

- Document key recovery procedures and test them regularly to ensure keys remain accessible during disasters

- Enable multi-factor authentication for all key management system access
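Two of these practices can be checked automatically: keeping keys out of backup storage and rotating them annually. The sketch below is hypothetical. It treats keys as files with a known creation date, whereas a KMS or HSM would expose the same information through its own API. The example paths are also illustrative.

```python
from datetime import datetime, timedelta
from pathlib import Path

MAX_KEY_AGE = timedelta(days=365)  # "rotate encryption keys annually"


def key_policy_issues(
    key_path: Path, backup_root: Path, created: datetime, now: datetime
) -> list[str]:
    """Flag a key stored inside backup storage or older than the rotation period."""
    issues = []
    # resolve() normalizes symlinks and ".." so the containment test can't be sidestepped.
    if key_path.resolve().is_relative_to(backup_root.resolve()):
        issues.append("encryption key is stored inside backup storage")
    age = now - created
    if age > MAX_KEY_AGE:
        issues.append(f"key is {age.days} days old; rotation is due")
    return issues
```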

Separate key management credentials from backup system credentials. Attackers who compromise backup administrator accounts should not automatically gain encryption key access. This separation creates an additional barrier requiring attackers to breach multiple authentication systems.

For organizations subject to regulatory requirements, encryption helps address compliance mandates including GDPR, HIPAA, and PCI-DSS. These frameworks require or strongly expect sensitive data to be protected at rest and in transit, and encryption is the standard way to meet that expectation. This makes backup encryption effectively mandatory rather than optional for regulated industries.

Monitor encryption key access logs for unusual activity. Unexpected key retrieval attempts signal potential attacks attempting to decrypt backup data for exfiltration or sabotage.

Backup policies

Reviewing and updating your anti-ransomware backup policies on a regular basis is a notably effective way to minimize the impact of a ransomware attack or prevent it outright. To be effective in the first place, a backup policy has to be up to date and flexible, covering all modern ransomware attack methods.

One of the best defenses against ransomware is restoring data from clean backups, since paying a ransom is no guarantee that your data will be decrypted, which underlines the importance of backups once again. Topics to cover when performing a thorough audit of your entire internal data structure include:

- Is the 3-2-1 rule in place?

- Are there any critical systems that are not covered by regular backup operations?

- Are those backups properly isolated so that they are not affected by ransomware?

- Was there ever a practice run of a system being restored from a backup to test how it works?
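The second and fourth audit questions lend themselves to a simple automated report. This sketch assumes you can export three things: the list of critical systems, the systems included in each backup job, and the date of each system’s last successful test restore. All names are hypothetical, and the 90-day limit mirrors the quarterly drill cadence suggested earlier.

```python
from datetime import date, timedelta


def audit_backup_policy(
    critical: set[str],
    jobs: dict[str, set[str]],
    last_restore_test: dict[str, date],
    today: date,
    max_test_age: timedelta = timedelta(days=90),
) -> dict[str, list[str]]:
    """Report critical systems with no backup job and systems whose last
    successful test restore is missing or too old."""
    covered = set().union(*jobs.values())
    overdue = [
        s for s in sorted(critical)
        if s not in last_restore_test or today - last_restore_test[s] > max_test_age
    ]
    return {"not backed up": sorted(critical - covered), "restore test overdue": overdue}
```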

Disaster recovery planning

A Disaster Recovery Plan (DRP) outlines how your organization responds to threats including ransomware, hardware failures, natural disasters, and human errors. Effective DRPs establish clear procedures before incidents occur, eliminating confusion during high-stress response situations.

Recovery objectives framework:

Recovery Point Objective (RPO) defines acceptable data loss measured in time: how much data you can afford to lose. Recovery Time Objective (RTO) defines acceptable downtime: how quickly you must restore operations. Set these targets based on business impact:

- Mission-critical systems (financial transactions, patient records): RPO of 15 minutes to 1 hour, RTO of 1-4 hours

- Important business systems (email, CRM, project management): RPO of 4-8 hours, RTO of 8-24 hours

- Standard systems (file servers, archives): RPO of 24 hours, RTO of 48-72 hours

Backup frequency and infrastructure must support these targets. Systems with 15-minute RPO require continuous replication or frequent snapshots, not daily backups.
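The arithmetic behind this can be checked with a short Python sketch. It assumes the worst case for periodic backups that capture data when the job starts: a restore point only counts once its job has finished, so data written just after one capture stays unprotected until the next job completes, giving a maximum loss of the backup interval plus the job duration. The tier limits below use the upper end of each RPO range listed above.

```python
from datetime import timedelta

# Upper end of each RPO range from the tiers above.
RPO_LIMITS = {
    "mission-critical": timedelta(hours=1),
    "important": timedelta(hours=8),
    "standard": timedelta(hours=24),
}


def worst_case_loss(interval: timedelta, job_duration: timedelta) -> timedelta:
    """Maximum data loss for jobs started every `interval` that take `job_duration`
    (assumes each job captures data at its start)."""
    return interval + job_duration


def meets_rpo(tier: str, interval: timedelta, job_duration: timedelta) -> bool:
    return worst_case_loss(interval, job_duration) <= RPO_LIMITS[tier]
```

For example, 15-minute snapshots that take 5 minutes satisfy the mission-critical tier, while a daily job that runs for an hour fails even the 24-hour standard tier (25 hours worst case), which is why a nominal "daily backup" and a 24-hour RPO are not quite the same thing.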

Ransomware incident response procedures:

- Isolate affected systems immediately – Disconnect compromised devices from networks to prevent ransomware spread, but leave systems powered on to preserve evidence

- Activate incident response team – Assign roles: incident commander, technical lead, communications coordinator, legal liaison

- Assess scope – Identify all compromised systems, determine ransomware variant, check if backups are affected

- Preserve evidence – Capture memory dumps, logs, and system states before remediation for potential law enforcement involvement

- Verify backup integrity – Test restoration from multiple backup generations to confirm clean recovery points exist

- Execute recovery – Restore from the most recent clean backup, rebuild compromised systems, implement additional security controls before reconnecting to production

Document ransomware payment decisions in advance. Establish criteria for when payment might be considered (life safety systems, no viable backups) versus firm refusal policies. Never negotiate without legal counsel and law enforcement coordination.

Security-centric education for employees

Backups cover both system-wide data and individual employee systems, including email and other data stored on workstations. Teaching employees why their participation in the backup process matters (for example, saving work to locations that are actually backed up) closes even more gaps in your defense against ransomware.

At the same time, while regular employees help with the backup process, they should not have any access to the backups themselves. The more people who can access backed-up data, the higher the chance of human error or of the system and its backups being compromised in some other way.

Infrastructure Hardening and Endpoint Protection

Ransomware exploits vulnerabilities in systems to gain initial access and spread laterally through networks. Comprehensive infrastructure hardening closes these entry points and limits attacker movement even when perimeter defenses fail.

Patch management forms the foundation of infrastructure security. Establish automated patch deployment for operating systems, applications, and firmware within 72 hours of release for critical vulnerabilities. Prioritize patches addressing known ransomware exploits and remote code execution flaws. Maintain an inventory of all systems to ensure nothing falls through patching gaps.

Endpoint detection and response (EDR) solutions provide real-time monitoring and threat detection on workstations and servers. EDR tools identify ransomware behavior patterns – such as rapid file encryption, unusual process execution, or attempts to delete shadow copies – and automatically isolate infected endpoints before ransomware spreads. Deploy EDR across all endpoints including backup servers and administrative workstations.
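One behavioral signal behind "rapid file encryption" detection is that encrypted output looks random. The Python sketch below illustrates the idea only and is not how any particular EDR product works: it measures the Shannon entropy of written data (close to 8 bits per byte for encrypted content) and raises an alert when too many high-entropy writes occur inside a sliding time window. The window, count, and entropy thresholds are arbitrary assumptions, and compressed formats such as ZIP archives or JPEG images are also high-entropy, so real products combine this with many other signals to limit false positives.

```python
import math
from collections import Counter, deque


def shannon_entropy(data: bytes) -> float:
    """Bits per byte: near 0 for repetitive data, near 8.0 for encrypted data."""
    if not data:
        return 0.0
    n = len(data)
    return -sum(c / n * math.log2(c / n) for c in Counter(data).values())


class EncryptionBurstDetector:
    """Flags a burst of high-entropy writes within a sliding time window.
    Default thresholds are illustrative assumptions, not tuned values."""

    def __init__(self, window_seconds=10.0, threshold=20, min_entropy=7.5):
        self.window = window_seconds
        self.threshold = threshold
        self.min_entropy = min_entropy
        self.events = deque()  # timestamps of recent high-entropy writes

    def observe(self, timestamp: float, written: bytes) -> bool:
        """Record one write; return True if the burst threshold is reached."""
        if shannon_entropy(written) >= self.min_entropy:
            self.events.append(timestamp)
        # Forget writes that have fallen out of the time window.
        while self.events and timestamp - self.events[0] > self.window:
            self.events.popleft()
        return len(self.events) >= self.threshold
```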

Attack surface reduction eliminates unnecessary access points. Each removed service or closed port represents one less vulnerability for attackers to exploit. As such, disable unused services, close unnecessary network ports, remove legacy protocols, and uninstall software that poses security risks. Also implement application whitelisting to prevent unauthorized executables from running.

Vulnerability scanning identifies security gaps before attackers do. Schedule weekly automated scans of all systems, prioritizing remediation based on exploit likelihood and potential impact. Pay particular attention to backup infrastructure, storage systems, and authentication servers – the high-value targets in ransomware campaigns.

Security awareness training addresses the human element. Run quarterly training that teaches employees to recognize phishing attempts, suspicious attachments, and social engineering tactics, and use simulated phishing exercises to identify users who need additional training. Email and phishing attacks accounted for 52.3% of ransomware incidents in 2024, making employee vigilance critical.

Regular infrastructure hardening audits verify that security configurations remain enforced. Systems drift from secure baselines over time through legitimate changes and misconfigurations – periodic audits catch these deviations before attackers exploit them.

Air gapping

Air-gapped backups provide physical isolation that makes them unreachable through network-based attacks. This approach physically disconnects backup storage from all networks, cloud infrastructure, and connectivity during non-backup periods, creating an absolute barrier against remote ransomware infiltration.

Ransomware spreads through network connections, scanning for accessible storage and backup repositories. Air-gapped storage eliminates this attack vector entirely – if the storage device has no network connection, ransomware would not be able to reach it regardless of credential compromise or zero-day exploits.

Implementing air-gapped backups:

- Use removable storage devices such as external hard drives, NAS appliances, or tape media

- Connect devices to backup systems only during scheduled backup windows, then physically disconnect immediately after completion

- Establish a rotation schedule with multiple storage devices – while one device captures the current backup, previous devices remain completely offline in secure physical locations

- Store disconnected devices in separate physical locations from primary infrastructure to protect against both ransomware and physical disasters

- Configure backups as full copies (chain-free backups) rather than incremental chains that depend on previous generations, so that any single device can be restored without access to other backup versions

- Automate disconnection using programmatically controlled tape libraries or storage arrays where possible to reduce human error

- Document reconnection procedures thoroughly for high-stress incident response situations
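A rotation schedule like this can be generated deterministically, so operators always know which device should be connected. This is a minimal Python sketch assuming a weekly rotation over four hypothetical tape labels and an arbitrary reference Monday; counting weeks from a fixed date, instead of using calendar week numbers, keeps the rotation continuous across year boundaries.

```python
from datetime import date

DEVICES = ["tape-A", "tape-B", "tape-C", "tape-D"]  # hypothetical media labels
EPOCH = date(2024, 1, 1)  # arbitrary reference Monday (an assumption)


def device_for(day: date, devices=DEVICES) -> str:
    """Device to connect for the backup window on `day` (weekly rotation)."""
    weeks = (day - EPOCH).days // 7
    return devices[weeks % len(devices)]


def offline_devices(day: date, devices=DEVICES) -> list:
    """Every other device, which must stay disconnected in secure storage."""
    active = device_for(day, devices)
    return [d for d in devices if d != active]
```

With four devices, each one is connected for one week out of four, so at any moment three complete copies are physically offline.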

Air-gapped backups suit organizations with defined backup windows and recovery time objectives measured in hours rather than minutes. Real-time applications requiring instant failover need supplementary protection through replicated systems or immutable cloud storage.

Chain-free backups need more storage capacity and longer backup windows than incremental chains, since every device holds a complete copy. In exchange, if ransomware compromises one link of an incremental chain, the chain-free archives on other devices remain independently recoverable.
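The difference between incremental chains and chain-free copies comes down to restore dependencies. The Python sketch below assumes a simplified model (a chronological list of jobs, each either a full backup or an incremental that depends on everything since the last full) and computes which jobs a restore needs. In an incremental chain, a single lost job breaks every later restore point; when every job is a full copy, it only affects itself.

```python
def restore_set(jobs, target):
    """jobs: chronological list of (name, kind), kind is "full" or "incr"
    (simplified model). Returns the jobs needed to restore `target`: the last
    full backup at or before it plus every incremental since that full."""
    names = [name for name, _ in jobs]
    idx = names.index(target)
    needed = []
    for name, kind in reversed(jobs[: idx + 1]):
        needed.append(name)
        if kind == "full":
            return list(reversed(needed))
    raise ValueError(f"no full backup precedes {target!r}")


def recoverable(jobs, target, lost):
    """True if none of the jobs needed for `target` is in the `lost` set."""
    return not any(name in lost for name in restore_set(jobs, target))
```

For a Sunday full followed by Monday to Wednesday incrementals, losing Monday's device makes Wednesday unrecoverable; with four full copies, Wednesday still restores on its own.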

Amazon S3 Object Lock

Object Lock is a feature of Amazon S3 that adds protection to objects stored in S3 buckets. As its name suggests, it prevents an object version from being deleted or overwritten for a set period of time, making the data effectively immutable for that time frame.

One of the biggest use cases for Object Lock is compliance with various regulatory frameworks, but it is also useful for general data protection. It is relatively simple to set up: Object Lock must be enabled on the bucket (which requires S3 Versioning), after which the user chooses a retention mode and a retention period, effectively storing the data in WORM (write once, read many) format for that period.

S3 Object Lock offers two retention modes: Governance mode and Compliance mode. Governance mode is the less strict of the two: most users cannot overwrite or delete a locked object version or change its lock settings, but users with special bypass permissions can alter the retention settings or delete the object if necessary. Compliance mode is stricter: no user, including the root user of the AWS account, can overwrite or delete a protected object version, change its retention mode, or shorten its retention period until the retention period expires.

Object Lock can also place a “Legal Hold” on specific objects. A legal hold works independently of retention periods and retention modes: it has no expiration date and prevents the object version from being overwritten or deleted until it is explicitly removed, which is useful for legal reasons such as litigation.
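As an illustration, the Python sketch below shows how a backup file might be written to S3 with an Object Lock retention setting and how a legal hold might be placed, using the `put_object` and `put_object_legal_hold` calls of the boto3 S3 client. It is a sketch under assumptions: the bucket already exists with Object Lock enabled, the caller passes in a client created with `boto3.client("s3")`, and the 30-day default is hypothetical. S3 requires an integrity checksum on uploads that carry Object Lock settings, which is why `ChecksumAlgorithm` is set.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

VALID_MODES = {"GOVERNANCE", "COMPLIANCE"}


def lock_params(mode: str, retain_days: int, now: Optional[datetime] = None) -> dict:
    """Object Lock arguments for an S3 put_object call."""
    if mode not in VALID_MODES:
        raise ValueError(f"mode must be one of {sorted(VALID_MODES)}")
    now = now or datetime.now(timezone.utc)
    return {
        "ObjectLockMode": mode,
        "ObjectLockRetainUntilDate": now + timedelta(days=retain_days),
    }


def upload_locked(s3_client, bucket: str, key: str, body: bytes,
                  mode: str = "COMPLIANCE", retain_days: int = 30) -> None:
    """Upload one backup object, locked until the retain-until date.
    Assumes `bucket` was set up with Object Lock enabled."""
    s3_client.put_object(
        Bucket=bucket,
        Key=key,
        Body=body,
        ChecksumAlgorithm="SHA256",  # integrity checksum required for locked uploads
        **lock_params(mode, retain_days),
    )


def place_legal_hold(s3_client, bucket: str, key: str) -> None:
    """Legal holds have no expiry; they stay until Status is set back to "OFF"."""
    s3_client.put_object_legal_hold(
        Bucket=bucket, Key=key, LegalHold={"Status": "ON"}
    )
```

Passing the client in keeps the lock logic testable without AWS access. Compliance is used as the default here because it is the mode that even privileged credentials cannot undo, which matters when attackers may have stolen administrator access.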

Zero Trust Security

The ongoing shift from traditional perimeter-based security to data-centric security has introduced many new technologies with significant security benefits, even if they sometimes come at a cost to user experience. Zero-trust security is one such approach that has become common in modern security systems, serving as a strong protective barrier against ransomware and other threats.

The zero-trust approach follows the central idea of data-centric security: every user and device requesting access to specific information is verified, regardless of who or what it is and where it is located. The approach rests on four main “pillars”:

- The principle of least privilege gives each user only the privileges they actually need, addressing the over-privileged access that was common across most industries for years.

- Extensive segmentation is mostly used to limit the scope of a potential security breach, eliminating the possibility of a single attacker acquiring access to the entire system at once.

- Constant verification is a core principle for zero-trust security, without any kind of “trusted users” list that is used to bypass the security system altogether.

- Ongoing monitoring confirms that all users are legitimate, in case malware or a malicious actor manages to bypass the first layer of security.
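The four pillars can be made concrete with a policy-decision function. The Python sketch below is a minimal illustration with hypothetical users, actions, and segment names: every request is evaluated from scratch (constant verification), access is denied unless an explicit grant exists (least privilege), grants are tied to a network segment (segmentation), and every decision is recorded for review (ongoing monitoring). Production zero-trust systems delegate these checks to identity providers, device-management tools, and policy engines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    user: str
    mfa_verified: bool
    device_compliant: bool
    segment: str   # network segment the request comes from
    action: str    # e.g. "read_backup", "delete_backup"


# Hypothetical least-privilege grants: (user, action) -> segments it may come from.
GRANTS = {
    ("backup-operator", "read_backup"): {"backup-admin"},
    ("backup-operator", "start_job"): {"backup-admin"},
    ("security-officer", "delete_backup"): {"backup-admin"},
}

AUDIT_LOG = []  # every decision is kept for monitoring and review


def authorize(req: AccessRequest):
    """Return (allowed, reason); nothing is trusted by default."""
    if not req.mfa_verified:
        decision = (False, "MFA not verified")
    elif not req.device_compliant:
        decision = (False, "device failed posture check")
    elif (req.user, req.action) not in GRANTS:
        decision = (False, "no grant for this action")
    elif req.segment not in GRANTS[(req.user, req.action)]:
        decision = (False, f"not permitted from segment {req.segment!r}")
    else:
        decision = (True, "allowed")
    AUDIT_LOG.append((req, decision))
    return decision
```

Note that the backup operator can read backups but cannot delete them: destructive actions are granted to a separate role, so a single stolen account cannot wipe the backup set.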

Network segmentation for backup infrastructure

Isolating backup systems from production networks prevents ransomware from moving laterally between compromised workstations and backup repositories. When backup infrastructure shares network space with endpoints and servers, attackers use the same pathways to reach both targets.

Deploy backup systems on dedicated network segments using separate VLANs or physical subnets. Configure firewall rules allowing only necessary backup traffic between production and backup networks – typically limited to backup agents initiating connections to backup servers on specific ports. Block all other traffic, particularly production-to-backup administrative protocols like RDP or SSH.
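The default-deny logic of such a firewall policy can be sketched in a few lines of Python with the standard `ipaddress` module. The addressing plan and the single allow rule are hypothetical: production agents in 10.10.0.0/16 may open TCP connections to one backup storage host on port 9103 (Bacula's default Storage Daemon port, used here only as an example), and everything else is denied, including SSH, RDP, and connections initiated in the opposite direction. A real deployment needs whichever additional ports and directions its backup software actually uses.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class AllowRule:
    src: str           # CIDR allowed to initiate the connection
    dst: str           # CIDR that may be reached
    port: int
    proto: str = "tcp"


# Hypothetical plan: production agents in 10.10.0.0/16, backup storage host 10.99.0.10.
ALLOW_RULES = [
    AllowRule("10.10.0.0/16", "10.99.0.10/32", 9103),
]


def permitted(src: str, dst: str, port: int, proto: str = "tcp", rules=ALLOW_RULES) -> bool:
    """Default deny: a flow passes only if an allow rule matches its source,
    destination, port, and protocol. Rules are directional, so an agent-to-server
    rule does not let the server initiate connections back to the agent."""
    s, d = ip_address(src), ip_address(dst)
    return any(
        s in ip_network(r.src) and d in ip_network(r.dst)
        and port == r.port and proto == r.proto
        for r in rules
    )
```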

Use separate Active Directory domains or forests for backup infrastructure authentication. Production domain compromise frequently gives attackers enterprise-wide access including backup systems when both share authentication infrastructure. Separate domains require attackers to breach multiple authentication systems independently.

Implement jump hosts or bastion servers as the sole entry point for backup system administration. Administrators connect to the jump host first, then access backup infrastructure from there. This architecture creates a monitored chokepoint for all administrative access and prevents direct connections from potentially compromised workstations.

Network isolation checklist:

- Dedicated VLANs or subnets for backup servers and storage

- Firewall rules restricting backup traffic to necessary ports and directions only

- Separate authentication domains for backup infrastructure

- Jump host requirement for all backup system administration

- Network access control (NAC) preventing unauthorized devices from reaching backup segments

- Regular firewall rule audits removing unnecessary access permissions

How Would Backup System Tools Provide Additional Ransomware Protection?

Beyond the measures above, it is possible (and advisable) to use the backup system’s own tools as an additional layer of protection against ransomware. Here are five ransomware backup best practices to further protect a business:

- Make sure the backups themselves are free of ransomware and other malware. Checking that your backups are not infected should be one of your highest priorities, since a backup compromised by ransomware is useless as a protection measure. Patch systems regularly to close software vulnerabilities, invest in malware detection tools and keep them updated, and take backup media offline as soon as possible after writing to them. In some cases, you might also consider WORM (Write Once, Read Many) storage to protect your backups from ransomware; WORM is available on certain tape and optical disc media, as well as from some cloud storage providers.

- Do not rely on cloud backups as your only backup storage type. While cloud storage has a number of advantages, it is not impervious to ransomware. Attackers cannot physically damage cloud-hosted data, but they can still reach it by exploiting the provider’s shared infrastructure or through an infected customer device that is connected to the cloud storage.

- Review and test your existing backup and recovery plans. Test your backup and recovery plan regularly to make sure it actually protects you against threats; discovering that the plan does not work as intended only after a ransomware attack is clearly undesirable. Work through several different scenarios, measure restoration results such as time to recovery, and establish which parts of the system are restored first by default. Remember that many businesses measure the cost of downtime in dollars per minute.

- Clarify or update retention policies and backup schedules. Review your ransomware backup strategy regularly: your data may not be backed up often enough, or your retention period may be too short, leaving you vulnerable to more advanced ransomware that stays dormant long enough to infect every backup copy you still retain.

- Thoroughly audit all of your data storage locations, including end-user systems, cloud storage, applications, and other system software, to confirm that no data is missed and everything is backed up properly.
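Part of keeping backups clean is detecting whether backup files were modified after they were written. The Python sketch below records an HMAC-SHA256 for each backup file at backup time and re-verifies the files later. Using a keyed HMAC rather than a plain hash means an attacker who can rewrite the backup files cannot also forge matching manifest entries, provided the key is stored away from the backup server (an assumption of this sketch). It only detects tampering after the fact: a file that was already infected when it was backed up will verify cleanly, so this complements malware scanning rather than replacing it.

```python
import hashlib
import hmac
from pathlib import Path


def file_mac(path: Path, key: bytes) -> str:
    """HMAC-SHA256 of a file, read in 1 MiB chunks so large volumes fit in memory."""
    mac = hmac.new(key, digestmod=hashlib.sha256)
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            mac.update(chunk)
    return mac.hexdigest()


def build_manifest(paths, key: bytes) -> dict:
    """Record a MAC for every backup file right after the backup job completes.
    The key is assumed to be kept off the backup server."""
    return {str(p): file_mac(Path(p), key) for p in paths}


def verify_manifest(manifest: dict, key: bytes) -> list:
    """Return the files that were modified or deleted since the manifest was built."""
    tampered = []
    for name, expected in manifest.items():
        path = Path(name)
        if not path.exists() or not hmac.compare_digest(file_mac(path, key), expected):
            tampered.append(name)
    return tampered
```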

How Does Ransomware Target and Compromise Your Backups?

Backup and recovery systems can protect organizations against ransomware in most cases, but they are not the only technology that keeps evolving: ransomware also grows more sophisticated over time.

One of the more recent problems is that many ransomware variants now target not only a company’s production data but also its backups, which is a significant problem for the entire industry. Many ransomware authors have modified their malware to locate and destroy backups. So while backups still protect your data against ransomware, you also have to protect the backups themselves.

Attackers typically rely on a handful of main angles to tamper with backups. We highlight the main ones below and explain how to protect your backups against each:

Ransomware damage potential increases with longer recovery cycles

While less obvious than the other issues, long recovery cycles are still a significant problem in the industry, mostly caused by outdated backup products that can only perform slow full backups. With such products, recovery after a ransomware attack can take days or even weeks, a severe disruption for most companies, as downtime and lost production costs quickly overshadow the initial ransomware damage estimates.

Two possible solutions here are: a) a solution that can restore a copy of your entire system as quickly as possible, so you do not spend days or weeks in recovery mode, and b) a solution that offers mass restore, bringing multiple VMs, databases, and servers back online at the same time.
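A rough estimate shows why mass restore matters. The Python sketch below assumes hypothetical system sizes and a fixed per-stream throughput that scales linearly with the number of parallel restore streams (in practice, storage and network bandwidth cap this). It assigns the largest restores first to the least-loaded stream, a standard greedy heuristic rather than an optimal schedule.

```python
import heapq


def restore_hours(sizes_tb, streams, tb_per_hour_per_stream):
    """Estimated wall-clock hours to restore systems of the given sizes (TB) using
    `streams` parallel restore streams. Assumes linear per-stream throughput;
    longest jobs go first to the least-loaded stream (greedy heuristic)."""
    if streams < 1:
        raise ValueError("need at least one restore stream")
    loads = [0.0] * streams  # hours queued on each stream, kept as a min-heap
    for size in sorted(sizes_tb, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + size / tb_per_hour_per_stream)
    return max(loads)
```

With systems of 4, 2, 2, 1, and 1 TB at 0.5 TB per hour per stream, a single stream needs 20 hours, while four parallel streams finish in 8 hours, a floor set by the largest single system.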

Your insurance policy may also become your liability

As mentioned before, more and more ransomware variants target both your original data and your backups, and some even try to infect or destroy the backed-up data before moving on to the source. You need to make it as hard as possible for ransomware to eliminate all of your backup copies, using a multi-layered defense.

Cybercriminals use sophisticated attacks that go straight for your backups, since backups are your main insurance policy for keeping the business running. You should keep at least one copy of the data in a state where it is never mounted by any external system (often referred to as an immutable backup copy) and implement comprehensive security features such as the aforementioned WORM storage, data isolation, data encryption, tamper detection, and monitoring for abnormal data behavior.

There are two measures here that we will go over in a bit more detail:

- Immutable backup copy. An immutable backup copy is one of the stronger measures against ransomware attacks: it is a copy of your backup that cannot be altered in any way once it has been created. It exists solely to be your main source of data if you are targeted by ransomware and need your information back as it was before the attack. For the duration of its retention period, an immutable backup cannot be deleted, changed, overwritten, or modified in any other way; it can only be copied elsewhere. Some vendors pitch immutability as foolproof, but no ransomware protection is foolproof, since attackers can still target the systems and credentials around immutable storage. Immutable backups remain highly valuable as long as they are part of a comprehensive strategy that includes attack detection and prevention and strong credential management.

- Backup encryption. It is somewhat ironic that encryption is also used as a measure against ransomware, since most ransomware itself uses encryption to hold data hostage. Encryption does not make your backups ransomware-proof, and it will not prevent exploits: encrypted backups can still be deleted or encrypted a second time by an attacker. What backup encryption does provide is confidentiality, so that attackers who reach your backup data cannot read it or threaten to publish it.

Visibility issues of your data become an advantage for ransomware

By its nature, ransomware is most dangerous when it gets into poorly managed infrastructure, such as unmonitored “dark data”. There it can do a lot of damage, encrypting your data and/or exfiltrating it for sale on the dark web. Detecting and countering this effectively requires modern, purpose-built tools.

While early detection of ransomware is possible with a modern data management solution and a good backup system alone, real-time detection requires machine learning and other AI techniques that alert you to suspicious activity as it happens, making attack discovery much faster.
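Machine-learning detectors are beyond the scope of a short example, but the alerting principle can be shown with a much simpler statistical baseline. When ransomware encrypts files, the next incremental backup typically contains an unusually large amount of changed data. The Python sketch below flags a day whose changed-data volume sits more than three standard deviations above recent history; the metric and the threshold are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, today, z_threshold=3.0):
    """Flag `today` (e.g. GB changed since the previous backup) when it is more than
    `z_threshold` standard deviations above the mean of `history`.
    The default threshold is an arbitrary assumption."""
    if len(history) < 2:
        return False  # too little history to form a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return today > mu  # perfectly flat history: any increase is unusual
    return (today - mu) / sigma > z_threshold
```

For a week of roughly 100 GB of daily changes, a 104 GB day passes unremarked, while a sudden 450 GB day, typical of mass encryption rewriting files, raises an alert.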

Data fragmentation is a serious vulnerability

Many organizations handle large amounts of data on a regular basis, but size is less of a problem than fragmentation: it is common for one company’s data to be spread across multiple locations and storage types. Fragmentation also creates large stores of secondary data (not always essential to business operations) that consume storage capacity and increase your exposure.

Each of these locations and backup types adds another potential avenue for ransomware, making the company’s systems even harder to protect. A data discovery solution running across your systems helps here: among other benefits, it gives you visibility into all of your data, making it far easier to spot threats, unusual activity, and potential vulnerabilities.

User credentials are used multiple times for ransomware attacks

User credentials have always been one of the biggest problems in this field, giving ransomware attackers direct access to valuable company data, and not all companies can detect credential theft in the first place. With compromised credentials, attackers can use exposed services and open ports to gain access to your devices and applications. The situation worsened when the COVID-19 pandemic forced businesses to switch largely to remote work in 2020, and the problem remains as present as ever.

These vulnerabilities affect your backups too, leaving them more exposed to ransomware. The most effective way to close this gap is to invest in strict user access controls, including multi-factor authentication, role-based access control, and continuous monitoring.
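To illustrate how one common second factor works, here is a minimal Python sketch of time-based one-time passwords (TOTP, RFC 6238, built on HOTP from RFC 4226) using only the standard library. The server and the user's authenticator both derive a short code from a shared secret and the current 30-second time step, so a stolen password alone is not enough to log in. This is for illustration only; production systems should use a vetted library and protect the shared secrets.

```python
import hashlib
import hmac
import struct


def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over the 8-byte counter, then dynamic truncation."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F
    code = int.from_bytes(mac[offset:offset + 4], "big") & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key: bytes, unix_time: float, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP with the counter set to the current time step."""
    return hotp(key, int(unix_time // step), digits)


def verify_totp(key: bytes, submitted: str, unix_time: float,
                step: int = 30, digits: int = 6, window: int = 1) -> bool:
    """Accept codes from the current step and `window` steps either side (clock drift)."""
    counter = int(unix_time // step)
    return any(
        hmac.compare_digest(hotp(key, c, digits), submitted)
        for c in range(max(counter - window, 0), counter + window + 1)
    )
```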

Always test and re-test your backups

Many companies only discover that their backups have failed, or are too difficult to restore, after they have fallen victim to a ransomware attack. To make sure your data is actually protected, run regular restore exercises and document the exact steps for creating and restoring your backups.

Because some types of ransomware stay dormant before encrypting your information, test all of your backup copies regularly, since you may not know exactly when the infection took place. Ransomware will only continue to find more complex ways to hide and to make your backup recovery efforts more costly.
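Dormant ransomware is also why retention must reach further back than the likely infection date. The Python sketch below picks the newest restore point taken before an estimated infection time, minus a safety margin because forensic estimates are uncertain, and returns None when retention no longer reaches back that far. The one-day margin is an assumption, and the chosen point still has to be test-restored and scanned before it is trusted.

```python
from datetime import datetime, timedelta


def newest_clean_point(restore_points, infected_at, margin=timedelta(days=1)):
    """Latest restore point taken before `infected_at - margin`, or None if the
    retained points do not reach back that far (the margin is an assumption)."""
    cutoff = infected_at - margin
    candidates = [p for p in restore_points if p < cutoff]
    return max(candidates) if candidates else None
```

A None result is itself a finding: it means the retention period is shorter than the infection's dwell time, which is exactly the gap that dormant ransomware exploits.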

Conclusion

For maximum protection of your backup against ransomware and similar threats, Bacula Systems’ strong advice is that your organization fully complies with the data backup and recovery best practices listed above. The methods and tools outlined in this blog post are used by Bacula’s customers on a regular basis to successfully protect their backups from ransomware. For companies without advanced-level data backup solutions, Bacula urges these organizations to conduct a full review of their backup strategy and evaluate a modern backup and recovery solution. Bacula is generally acknowledged in the industry to have exceptionally high levels of security in its backup software. Contact Bacula now for more information.

Key Takeaways

- Modern ransomware attacks target backup systems in most cases, making backup protection as critical as protecting production data

- Implement the 3-2-1-1-0 rule with three copies of data on two media types, one off-site, one immutable or offline, and zero errors through regular verification and recovery testing

- Immutable storage using WORM technology and air-gapped backups create multiple defense layers that prevent attackers from deleting or encrypting backup copies even with compromised administrator credentials

- Separate backup infrastructure from production networks using dedicated VLANs, distinct authentication domains, and strict access controls with multi-factor authentication to prevent lateral ransomware movement

- Double-extortion tactics mean backups alone cannot protect against data theft and publication threats – organizations need comprehensive strategies including encryption, data loss prevention, and network segmentation

- Regular recovery testing with documented RTO and RPO metrics transforms theoretical backup protection into proven capability, while continuous monitoring detects reconnaissance activities before attackers destroy backup infrastructure

Download Bacula’s white paper on ransomware protection

What is High Performance Computing Security and Why Does It Matter?

High Performance Computing (HPC) is a critical infrastructure backbone for scientific discovery, artificial intelligence advancement, and national economic competitiveness. As these systems process increasingly sensitive research data and support mission-critical computational workloads, traditional enterprise security approaches fall short of addressing the unique challenges inherent in HPC environments. Knowing how to work with these fundamental differences is essential for implementing effective security measures that protect valuable computational resources without compromising overall productivity.

High Performance Computing refers to the practice of using supercomputers and parallel processing techniques to solve highly complex computational problems that demand enormous processing power. These systems typically feature thousands of interconnected processors, specialized accelerators like GPUs, and high-speed networking infrastructure capable of performing quadrillions of calculations per second. HPC systems support critical applications across a multitude of domains:

- Scientific research and modeling – Climate simulation, drug discovery, nuclear physics, and materials science

- Artificial intelligence and machine learning – Training large language models, computer vision, and deep learning research

- Engineering and design – Computational fluid dynamics, structural analysis, and product optimization

- Financial modeling – Risk analysis, algorithmic trading, and economic forecasting

- National security applications – Cryptographic research, defense modeling, and intelligence analysis

The security implications of HPC systems extend far beyond typical IT infrastructure concerns. A successful attack on an HPC facility could lead to intellectual property theft worth billions of dollars, compromise sensitive research data, disrupt critical scientific programs, or even constitute a national security breach.

Why HPC Security Standards and Architecture Matter in Modern Facilities

HPC security differs fundamentally from enterprise IT through architectural complexity and performance-first design. Unlike conventional business infrastructure, HPC systems prioritize raw computational performance while managing hundreds of thousands of components, creating expanded attack surfaces that are difficult to monitor comprehensively. Traditional security tools cannot handle the volume and velocity of HPC operations, and for performance-sensitive workloads, standard security controls such as real-time malware scanning can severely disrupt petabyte-scale operations.

Before NIST SP 800-223 and SP 800-234, organizations lacked comprehensive, standardized guidance tailored to HPC environments. These complementary standards now close that gap. SP 800-223 introduces a four-zone reference architecture that acknowledges distinct security requirements across access points, management systems, compute resources, and data storage, and it also documents HPC-specific attack scenarios such as credential harvesting and supply chain attacks.

Real-world facilities exemplify these challenges. Oak Ridge National Laboratory systems contain hundreds of thousands of compute cores and exabyte-scale storage while balancing multi-mission requirements supporting unclassified research, sensitive projects, and classified applications. They accommodate international collaboration and dynamic software environments that traditional enterprise security approaches cannot effectively address.

The multi-tenancy model creates additional complexity as HPC users require direct system access, custom software compilation, and arbitrary code execution capabilities. This demands security boundaries balancing research flexibility with protection requirements across specialized ecosystems including scientific libraries, research codes, and package managers with hundreds of dependencies.

How Do We Understand HPC Security Architecture and Threats?

HPC security requires a fundamental shift in perspective from traditional enterprise security models. The unique architectural complexity and threat landscape of high-performance computing environments demand specialized frameworks that acknowledge the existing tensions between computational performance and security controls.

NIST SP 800-223 provides the architectural foundation by establishing a four-zone reference model that recognizes the distinct security requirements of different HPC system components. This zoned approach acknowledges that blanket security policies cannot adequately address the varying threat landscapes and operational requirements of access points, management systems, compute resources, and data storage infrastructure.

The complementary relationship between NIST SP 800-223 and SP 800-234 creates a comprehensive security framework specifically tailored to HPC environments. SP 800-223 defines the architectural structure and identifies key threat scenarios, while SP 800-234 provides detailed implementation guidance through a security control overlay that tailors existing frameworks (the NIST SP 800-53 security controls) to the HPC operational context.

A dual-standard approach like this addresses critical gaps in HPC security guidance by providing both conceptual architecture and practical implementation details. With it, organizations move beyond adapting inadequate enterprise security frameworks to implementing purpose-built security measures that protect computational resources without compromising research productivity or scientific discovery missions.

What Does NIST SP 800-223 Establish for HPC Security Architecture?

NIST SP 800-223 provides the foundational architectural framework that transforms HPC security from ad-hoc implementations to structured, zone-based protection strategies. This standard introduces a systematic approach to securing complex HPC environments while maintaining the performance characteristics essential for scientific computing and research operations.

How Does the Four-Zone Reference Architecture Work?

The four-zone architecture recognizes that different HPC components require distinct security approaches based on their operational roles, threat exposure, and performance requirements. This zoned model replaces one-size-fits-all security policies with targeted protections that acknowledge the unique characteristics of each functional area.

| Zone | Primary Components | Security Focus | Key Challenges |
|---|---|---|---|
| Access Zone | Login nodes, data transfer nodes, web portals | Authentication, session management, external threat protection | Direct internet exposure, high-volume data transfers |
| Management Zone | System administration, job schedulers, configuration management | Privileged access controls, configuration integrity | Elevated privilege protection, system-wide impact potential |
| Computing Zone | Compute nodes, accelerators, high-speed networks | Resource isolation, performance preservation | Microsecond-level performance requirements, multi-tenancy |
| Data Storage Zone | Parallel file systems, burst buffers, petabyte storage | Data integrity, high-throughput protection | Massive data volumes, thousands of concurrent I/O operations |

The Access Zone serves as the external interface that must balance accessibility for legitimate users with protection against external threats. Security controls here focus on initial access validation while supporting the interactive sessions and massive data transfers essential for research productivity.

Management Zone components require elevated privilege protection since compromise here could affect the entire HPC infrastructure. Security measures emphasize administrative access controls and monitoring of privileged operations that control system behavior and resource allocation across all zones.

The Computing Zone faces the challenge of maintaining computational performance while protecting shared resources across multiple concurrent workloads. Controls must minimize overhead while preventing cross-contamination between research projects that share the same physical infrastructure.

Data Storage Zone security implementations aim to protect against data corruption and unauthorized access while maintaining performance in systems handling petabyte-scale storage with thousands of concurrent operations from distributed compute nodes.
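To make the zone model concrete, here is a minimal Python sketch that encodes the four zones from the table as data and classifies a component into its zone, so that zone-specific policy can be looked up. The `Zone` class and `zone_for()` helper are hypothetical illustrations rather than anything defined by NIST SP 800-223, and the component names are a simplified subset of the table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    name: str
    components: tuple[str, ...]
    security_focus: tuple[str, ...]


# Simplified transcription of the four-zone table (hypothetical naming).
ZONES = (
    Zone("Access", ("login node", "data transfer node", "web portal"),
         ("authentication", "session management", "external threat protection")),
    Zone("Management", ("system administration", "job scheduler", "configuration management"),
         ("privileged access controls", "configuration integrity")),
    Zone("Computing", ("compute node", "accelerator", "high-speed network"),
         ("resource isolation", "performance preservation")),
    Zone("Data Storage", ("parallel file system", "burst buffer"),
         ("data integrity", "high-throughput protection")),
)


def zone_for(component: str) -> Zone:
    """Return the zone that owns a component so its zone-specific policy can be applied."""
    key = component.strip().lower()
    for zone in ZONES:
        if key in zone.components:
            return zone
    # Unclassified components are an error: every asset should belong to a zone.
    raise KeyError(f"unclassified component: {component!r}")
```

Raising on unknown components, rather than defaulting to a zone, reflects the idea that every asset should be deliberately placed in the architecture.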

What Are the Real-World Attack Scenarios Against HPC Systems?

NIST SP 800-223 documents four primary attack patterns that specifically target HPC infrastructure characteristics and operational requirements. These scenarios reflect actual threat intelligence and incident analysis from HPC facilities worldwide.

Credential Harvesting

Credential Harvesting attacks exploit the extended session durations and shared access patterns common in HPC environments. Attackers target long-running computational jobs and shared project accounts to establish persistent access that can remain undetected for months. The attack succeeds by compromising external credentials through phishing or data breaches, then leveraging legitimate HPC access patterns to avoid detection while maintaining ongoing system access.
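A facility might look for these patterns in its active-session records. The sketch below assumes a hypothetical `Session` record and two illustrative thresholds (distinct source addresses per account, maximum session age); neither value comes from NIST SP 800-223, and real detection would be tuned against the site's own baseline of normal behavior.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Session:
    account: str
    source_ip: str
    start: datetime


# Hypothetical thresholds; tune to the facility's normal usage patterns.
MAX_SOURCES_PER_ACCOUNT = 3
MAX_SESSION_AGE = timedelta(days=14)


def suspicious_accounts(sessions: list[Session], now: datetime) -> set[str]:
    """Flag accounts seen from unusually many sources or holding very old sessions."""
    sources: dict[str, set[str]] = defaultdict(set)
    flagged: set[str] = set()
    for s in sessions:
        sources[s.account].add(s.source_ip)
        # Sessions that stay open far longer than usual may indicate persistence.
        if now - s.start > MAX_SESSION_AGE:
            flagged.add(s.account)
    # Shared or stolen credentials often show up as logins from many addresses.
    flagged.update(a for a, ips in sources.items() if len(ips) > MAX_SOURCES_PER_ACCOUNT)
    return flagged
```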

Remote Exploitation

Remote Exploitation scenarios focus on vulnerable external services that provide legitimate HPC functionality but create attack vectors into internal systems. Web portals, file transfer services, and remote visualization tools become pivot points when not properly secured or isolated. Attackers exploit these services to bypass perimeter defenses and gain an initial foothold within the HPC environment before moving laterally to more sensitive systems.

Supply Chain Attacks

Supply Chain Attacks target the complex software ecosystem that supports HPC operations. Malicious code enters through CI/CD (Continuous Integration / Continuous Deployment) pipelines, compromised software repositories, or tainted dependencies in package management systems like Spack. These attacks are particularly dangerous because they affect multiple facilities simultaneously and may remain dormant until triggered by specific computational conditions or data inputs.
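One basic defense is to verify every downloaded artifact against a pinned checksum before it is built or installed. Spack package recipes already pin SHA-256 checksums for source archives; the generic sketch below instead assumes a hypothetical manifest that maps file names to expected SHA-256 digests and reports anything missing or mismatched. A checksum only proves the file matches what was pinned, so it complements, rather than replaces, review of the pinned sources themselves.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream the file in 1 MiB chunks so large tarballs do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest: dict[str, str], directory: Path) -> list[str]:
    """Return names of artifacts that are missing or whose SHA-256 differs from the pin."""
    failures = []
    for name, expected in manifest.items():
        path = directory / name
        if not path.is_file() or sha256_of(path) != expected.lower():
            failures.append(name)
    return failures
```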

Confused Deputy Attacks

Confused Deputy Attacks manipulate privileged programs into misusing their authority on behalf of unauthorized parties. In HPC environments, these attacks often target job schedulers, workflow engines, or administrative tools that operate with elevated privileges across multiple zones. The attack succeeds by providing malicious input that causes legitimate programs to perform unauthorized actions while appearing to operate normally.
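The classic mitigation is for a privileged service to check the authority of the party that made the request, not its own. The toy model below assumes a hypothetical scheduler feature that copies job output to a user-chosen path; the in-memory `Filesystem` class stands in for real operating-system permission checks.

```python
from dataclasses import dataclass, field


@dataclass
class Filesystem:
    """Toy model: maps each path to the set of users allowed to write it."""
    writers: dict[str, set[str]] = field(default_factory=dict)
    contents: dict[str, str] = field(default_factory=dict)


def deliver_output_unsafe(fs: Filesystem, requester: str, dest: str, data: str) -> None:
    # Vulnerable: the privileged service writes wherever it is told (a confused deputy).
    fs.contents[dest] = data


def deliver_output(fs: Filesystem, requester: str, dest: str, data: str) -> None:
    # Safe: authorize against the *requester's* rights, not the service's elevated ones.
    if requester not in fs.writers.get(dest, set()):
        raise PermissionError(f"{requester} may not write {dest}")
    fs.contents[dest] = data
```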

What Makes the HPC Threat Landscape Unique?

The HPC threat environment differs significantly from enterprise IT due to performance-driven design decisions and research-focused operational requirements that create new attack surfaces and defensive challenges.

Trade-offs between performance and security create fundamental vulnerabilities that do not exist in traditional IT environments. Common performance-driven compromises include:

- Disabled security features – Address Space Layout Randomization, stack canaries, and memory protection removed for computational efficiency

- Unencrypted high-speed interconnects – Latency-sensitive networks that sacrifice encryption for microsecond performance gains

- Throughput-prioritized file systems – Shared storage systems that minimize access control overhead to maximize I/O performance

- Relaxed authentication requirements – Long-running jobs and batch processing make multi-factor authentication difficult to enforce

These architectural decisions create exploitable conditions that attackers leverage to compromise systems that would otherwise be protected in traditional enterprise environments.
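Some of these trade-offs can be audited directly. As one example, the Linux kernel exposes its ASLR setting in `/proc/sys/kernel/randomize_va_space`: 0 disables it, 1 randomizes the stack, mmap base, and VDSO, and 2 additionally randomizes the heap. The sketch below assumes Linux compute nodes and simply reports that level, so a site can confirm whether ASLR was disabled deliberately or by accident.

```python
from pathlib import Path

ASLR_SETTING = Path("/proc/sys/kernel/randomize_va_space")
ASLR_LEVELS = {
    0: "disabled",
    1: "partial (stack, mmap base, VDSO)",
    2: "full (adds heap/brk randomization)",
}


def describe_aslr(raw: str) -> str:
    """Translate the raw sysctl value into a human-readable level."""
    level = int(raw.strip())
    return ASLR_LEVELS.get(level, f"unknown ({level})")


def audit_node() -> str:
    """Report the ASLR level on this node; the setting only exists on Linux."""
    if not ASLR_SETTING.exists():
        return "setting unavailable (not a Linux node?)"
    return describe_aslr(ASLR_SETTING.read_text())


if __name__ == "__main__":
    print(audit_node())
```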

Supply chain complexity in HPC environments far exceeds typical enterprise software management challenges. Modern HPC facilities manage 300+ workflow systems with complex dependency graphs spanning scientific libraries, middleware, system software, and custom research codes. This inherent complexity creates multiple entry points for malicious code injection and makes comprehensive security validation extremely difficult to implement and maintain.

Multi-tenancy across research projects complicates traditional security boundary enforcement. Unlike enterprise systems with well-defined user roles and data classification, HPC systems must support dynamic project memberships, temporary collaborations, and varying data sensitivity levels within shared infrastructure. Such a structure creates scenarios where traditional access controls and data isolation mechanisms prove inadequate for research computing requirements.

Another emerging concern is “scientific phishing”: a novel attack vector in which malicious actors supply tainted input data, computational models, or analysis workflows that appear legitimate but contain hidden exploits. These attacks target the collaborative nature of scientific research and researchers’ tendency to share data, code, and computational resources across institutional boundaries without comprehensive security validation.

What Does NIST SP 800-234’s Security Control Overlay Provide?

NIST SP 800-234 translates the architectural framework of SP 800-223 into actionable security controls specifically tailored for HPC operational realities. This standard provides the practical implementation guidance that transforms theoretical security architecture into deployable protection measures while maintaining the performance characteristics essential for scientific computing.

How Does the Moderate Baseline Plus Overlay Framework Work?

The SP 800-234 overlay builds upon the NIST SP 800-53 Moderate baseline by applying HPC-specific tailoring to create a comprehensive security control framework. This approach recognizes that HPC environments require both established security practices and specialized adaptations that address unique computational requirements.

The framework encompasses 288 total security controls, consisting of the 287 controls from the SP 800-53 Moderate baseline plus AC-10 (Concurrent Session Control), added specifically for HPC multi-user environments. This baseline provides proven security measures while acknowledging that standard enterprise implementations are often insufficient for HPC operational demands.

Sixty critical controls receive HPC-specific tailoring and supplemental guidance that addresses the unique challenges of high-performance computing environments. These modifications range from performance-conscious implementation approaches to entirely new requirements that don’t exist in traditional IT environments. The tailoring process considers factors such as:

- Performance impact minimization – Controls adapted to reduce computational overhead

- Scale-appropriate implementations – Security measures designed for systems with hundreds of thousands of components

- Multi-tenancy considerations – Enhanced controls for shared research computing environments

- Zone-specific applications – Differentiated requirements across Access, Management, Computing, and Data Storage zones

Zone-specific guidance provides implementers with detailed direction for applying controls differently across the four-zone architecture. Access zones require different authentication approaches than Computing zones, while Management zones need enhanced privilege monitoring that would be impractical for high-throughput Data Storage zones.

The supplemental guidance expands standard control descriptions with additional HPC context, implementation examples, and performance considerations. This guidance bridges the gap between generic security requirements and the specific operational realities of scientific computing environments.

What Are the Critical Control Categories for HPC?

The overlay identifies key control families that require the most significant adaptation for HPC environments, reflecting the unique operational characteristics and threat landscapes of high-performance computing systems.

Role-Based Access Control

Role-Based Access Control (AC-2, AC-3) receives extensive HPC-specific guidance due to the complex access patterns inherent in research computing. Unlike enterprise environments with relatively static user roles, HPC systems must support dynamic project memberships, temporary research collaborations, and varying access requirements based on computational resource needs. Account management must accommodate researchers who may need different privilege levels across multiple concurrent projects while maintaining clear accountability and audit trails.
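A minimal sketch of per-project role checks, assuming hypothetical roles (`VIEWER`, `MEMBER`, `PI`), assignments, and action names that SP 800-234 does not define. The point it illustrates is that a role is bound to a (user, project) pair rather than to the user alone, and that every decision is appended to an audit trail.

```python
from enum import IntEnum


class Role(IntEnum):
    VIEWER = 1
    MEMBER = 2
    PI = 3  # principal investigator


# Hypothetical assignments: the same user holds different roles in different projects.
ASSIGNMENTS: dict[tuple[str, str], Role] = {
    ("alice", "climate"): Role.PI,
    ("alice", "genomics"): Role.VIEWER,
    ("bob", "genomics"): Role.MEMBER,
}

# Minimum role required for each action (illustrative).
REQUIRED = {"read_data": Role.VIEWER, "submit_job": Role.MEMBER, "add_member": Role.PI}


def authorize(user: str, project: str, action: str, audit: list[str]) -> bool:
    """Allow the action only if the user's role in *this* project is high enough."""
    role = ASSIGNMENTS.get((user, project))
    allowed = role is not None and role >= REQUIRED[action]
    # Record every decision, allowed or denied, for accountability.
    audit.append(f"{user} {action} {project} -> {'allow' if allowed else 'deny'}")
    return allowed
```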

HPC-Specific Logging

HPC-Specific Logging (AU-2, AU-4, AU-5) addresses the massive volume and velocity challenges of security monitoring in high-performance environments. Zone-specific logging priorities help organizations focus monitoring efforts on the most critical security events while managing petabytes of potential log data. Volume management strategies include intelligent filtering, real-time analysis, and tiered storage approaches that maintain security visibility without overwhelming storage and analysis systems.
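The tiered-storage idea can be sketched as routing each event by a zone-specific severity threshold: events at or above the threshold go to a hot tier for real-time analysis, and the rest go to cheaper cold storage. The thresholds below are hypothetical illustrations of zone-specific priorities (for instance, keeping everything from the Management Zone hot), not values taken from the overlay.

```python
from dataclasses import dataclass

SEVERITY = {"debug": 0, "info": 1, "warning": 2, "error": 3, "critical": 4}

# Hypothetical per-zone thresholds for the hot (real-time analysis) tier.
HOT_THRESHOLD = {"Access": 1, "Management": 0, "Computing": 3, "Data Storage": 2}


@dataclass(frozen=True)
class Event:
    zone: str
    severity: str
    message: str


def route(events: list[Event]) -> dict[str, list[Event]]:
    """Split events into hot and cold tiers using each zone's own threshold."""
    tiers: dict[str, list[Event]] = {"hot": [], "cold": []}
    for ev in events:
        tier = "hot" if SEVERITY[ev.severity] >= HOT_THRESHOLD[ev.zone] else "cold"
        tiers[tier].append(ev)
    return tiers
```

A low threshold in the Management Zone keeps privileged activity fully visible, while a high threshold in the Computing Zone keeps routine high-volume node chatter out of the real-time pipeline without discarding it.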

Session Management

Session Management (AC-2(5), AC-10, AC-12) controls are tailored for the unique timing requirements of computational workloads. Long-running computational jobs may execute for days or weeks, requiring session timeout mechanisms that distinguish between interactive debugging sessions and legitimate batch processing. Interactive debugging sessions need different timeout policies than automated workflow execution, while inactivity detection must account for valid computational patterns that might appear inactive to traditional monitoring systems.
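One way to express this distinction is a policy that applies an inactivity timeout only to interactive sessions and bounds batch jobs by their requested walltime instead. The sketch assumes a hypothetical `Session` record and illustrative limits (30 minutes idle, 15 minutes of walltime grace); the actual values are a site policy decision.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical limits; each facility sets these in its own policy.
INTERACTIVE_IDLE_LIMIT = timedelta(minutes=30)
BATCH_WALLTIME_GRACE = timedelta(minutes=15)


@dataclass
class Session:
    kind: str             # "interactive" or "batch"
    last_input: datetime  # last user command (meaningful for interactive sessions)
    started: datetime
    walltime: timedelta   # requested walltime (meaningful for batch jobs)


def should_terminate(s: Session, now: datetime) -> bool:
    if s.kind == "interactive":
        # Interactive sessions: classic inactivity timeout.
        return now - s.last_input > INTERACTIVE_IDLE_LIMIT
    if s.kind == "batch":
        # Batch jobs see no user input for days, so bound them by requested walltime.
        return now - s.started > s.walltime + BATCH_WALLTIME_GRACE
    raise ValueError(f"unknown session kind: {s.kind}")
```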

Authentication Architecture

Authentication Architecture (IA-1, IA-2, IA-11) guidance addresses when multi-factor authentication should be required versus delegated within established system trust boundaries. External access points require strong authentication, but internal zone-to-zone communication may use certificate-based or token-based authentication to maintain performance while ensuring accountability. The guidance helps organizations balance security requirements with the need for automated, high-speed inter-system communication.
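As an illustration of token-based authentication inside the trust boundary, the sketch below issues a short-lived HMAC-signed token once a user has passed MFA at the Access Zone, which internal services can then verify cheaply with Python's standard `hmac` module. The token format, shared key, and 300-second lifetime are hypothetical; production deployments typically rely on established mechanisms such as X.509 certificates, Kerberos, or MUNGE (used by Slurm), together with proper key management.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical shared key provisioned to services inside the trust boundary.
SERVICE_KEY = b"replace-with-a-managed-secret"
TOKEN_LIFETIME_S = 300


def _sign(payload: str, key: bytes) -> str:
    return hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()


def issue_token(user: str, key: bytes = SERVICE_KEY, now: Optional[float] = None) -> str:
    """Called only after the user has completed MFA at the Access Zone."""
    issued = int(time.time() if now is None else now)
    payload = f"{user}|{issued}"
    return f"{payload}|{_sign(payload, key)}"


def verify_token(token: str, key: bytes = SERVICE_KEY, now: Optional[float] = None) -> Optional[str]:
    """Return the user if the token is authentic and fresh, otherwise None."""
    try:
        user, issued, sig = token.rsplit("|", 2)
        issued_at = int(issued)
    except ValueError:
        return None  # malformed token
    current = time.time() if now is None else now
    # compare_digest avoids leaking signature bytes through timing differences.
    if hmac.compare_digest(sig, _sign(f"{user}|{issued}", key)) and 0 <= current - issued_at <= TOKEN_LIFETIME_S:
        return user
    return None
```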

What Zone-Specific Security Implementations Are Recommended?

The overlay provides detailed implementation guidance for each zone in the four-zone architecture, recognizing that security controls must be adapted to the specific operational characteristics and threat profiles of different HPC system components.

Access Zone implementations focus on securing external connections while supporting the high-volume data transfers and interactive sessions essential for research productivity. Security measures include enhanced session monitoring for login nodes, secure file transfer protocols that maintain performance characteristics, and web portal protections that balance usability with security. User session management must accommodate both interactive work and automated data transfer operations without creating barriers to legitimate research activities.

Management Zone protections require additional safeguards for privileged administrative functions that affect system-wide operations. Enhanced monitoring covers administrative access patterns, configuration change tracking, and job scheduler policy modifications. Privileged operation logging provides detailed audit trails for actions that could compromise system integrity or affect multiple research projects simultaneously.

Computing Zone security implementations address the challenge of protecting shared computational resources while maintaining the microsecond-level performance requirements of HPC workloads. Shared GPU resource protection includes memory isolation mechanisms, emergency power management procedures for graceful system shutdown, and compute node sanitization processes that ensure clean state between different computational jobs. Security controls must minimize performance impact while preventing cross-contamination between concurrent research workloads.
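Node sanitization can be enforced as a small state machine: a node that has run a job is marked dirty and cannot be allocated again until its sanitization steps have completed. The `ComputeNode` class and the steps passed to it are hypothetical; real steps might include clearing GPU memory and wiping node-local scratch space using site- and vendor-specific tools.

```python
from enum import Enum, auto
from typing import Callable, Iterable, Optional


class NodeState(Enum):
    CLEAN = auto()      # sanitized and ready for a new job
    ALLOCATED = auto()  # running a job for one owner
    DIRTY = auto()      # job finished; residual data may remain


class ComputeNode:
    def __init__(self, name: str) -> None:
        self.name = name
        self.state = NodeState.CLEAN
        self.owner: Optional[str] = None

    def allocate(self, owner: str) -> None:
        # Refuse to hand the node to a new job until it has been sanitized.
        if self.state is not NodeState.CLEAN:
            raise RuntimeError(f"{self.name} is {self.state.name}, not CLEAN")
        self.state, self.owner = NodeState.ALLOCATED, owner

    def release(self) -> None:
        self.state, self.owner = NodeState.DIRTY, None

    def sanitize(self, steps: Iterable[Callable[[str], None]]) -> None:
        # Each step (e.g. GPU memory scrub, scratch wipe) receives the node name.
        if self.state is NodeState.ALLOCATED:
            raise RuntimeError("cannot sanitize a node with a running job")
        for step in steps:
            step(self.name)
        self.state = NodeState.CLEAN
```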

Data Storage Zone recommendations focus on integrity protection approaches that work effectively with petabyte-scale parallel file systems. Implementation guidance covers distributed integrity checking, backup strategies for massive datasets, and access control mechanisms that maintain high-throughput performance. The challenge involves protecting against both malicious attacks and system failures that could compromise research data representing years of computational investment.
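Distributed integrity checking often relies on per-chunk checksums, which let large files be verified in parallel and pinpoint exactly which region was corrupted. In the sketch below, a thread pool on a single host stands in for work that a real deployment would spread across many nodes, and the 4 MiB chunk size is an arbitrary assumption.

```python
import hashlib
import os
from concurrent.futures import ThreadPoolExecutor

CHUNK = 4 * 1024 * 1024  # 4 MiB; arbitrary choice for illustration


def _hash_chunk(path: str, index: int, chunk: int) -> str:
    # Each worker opens its own handle and seeks to its chunk independently.
    with open(path, "rb") as fh:
        fh.seek(index * chunk)
        return hashlib.sha256(fh.read(chunk)).hexdigest()


def chunk_digests(path: str, chunk: int = CHUNK, workers: int = 8) -> list[str]:
    size = os.path.getsize(path)
    count = max(1, -(-size // chunk))  # ceiling division; an empty file is one empty chunk
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves input order, so digests line up with chunk indices.
        return list(pool.map(lambda i: _hash_chunk(path, i, chunk), range(count)))


def corrupted_chunks(baseline: list[str], current: list[str]) -> list[int]:
    """Indices of chunks whose digest changed since the baseline was recorded."""
    if len(baseline) != len(current):
        raise ValueError("file size changed; re-baseline or treat the file as corrupted")
    return [i for i, (a, b) in enumerate(zip(baseline, current)) if a != b]
```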

How Do Organizations Implement HPC Security in Practice?

Moving from standards documentation to operational reality requires organizations to navigate complex implementation challenges while maintaining research productivity. Successful HPC security deployments balance theoretical frameworks with practical constraints, organizational culture, and the fundamental reality that security measures must enhance rather than hinder scientific discovery.

What Is the “Sheriffs and Deputies” Security Model?

The most effective HPC security implementations adopt what practitioners call the “Sheriffs and Deputies” model – a shared responsibility framework that recognizes both facility-managed enforcement capabilities and the essential role of user-managed security practices in protecting computational resources.