Fast Backup Software: Definition and Types

For today’s organizations, fast data backup and recovery is vital to running their processes and operations, yet it is often not appreciated until it is actually needed.

One reason data backup often has low visibility within an organization is that its services can go a long time without actually being used, and that it typically runs outside working hours, largely unnoticed.

Several other factors add to this. IT departments often have limited resources. Data volumes have grown significantly in most organizations, driven by technologies such as artificial intelligence, machine learning, the Internet of Things (IoT) and Big Data. For these reasons, backup should be as fast and efficient as possible, while still guaranteeing the integrity and completeness of the data.

Fast backup software is not only about the speed of backup and recovery. It must also be easy to use, easy to administer, configurable, and able to adapt to changing requirements, such as:

- Objects to be backed up. These are not only files or directories. Selection must also take into account their attributes and properties, such as type, size, and location.

- Scheduling adjustments, so that backup schedules can keep pace with ongoing changes in the organization’s requirements.

- Manual execution of backup and recovery jobs. Most backup tools offer this. A good solution should also let administrators run jobs with temporary changes to the resources defined for them, such as storage, backup level, or destination.

- Configuration of new jobs. Organizations keep generating new requirements, so technological adaptability is paramount. The backup solution must accommodate these changes quickly to keep response and execution times short. This also makes it easier to create and configure new backup functions for clients or servers.

- Changes in backup hardware. New requirements can also lead to changes in the hardware used for backup. A good backup solution must adapt to these changes. It should maintain the same level of service, or improve performance, when new hardware is deployed.

- Bandwidth control. Administrators should be able to manage and limit the bandwidth of the channel or medium used for backups. This lets them prioritize backup jobs according to organizational criteria.

- Monitoring and restarting of failed jobs. This includes recovering quickly and effectively from situations that may threaten the backup window. Restarted jobs should run to completion within the same integrated solution.
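Bandwidth control of the kind described in the list above is commonly implemented with a token bucket. The following is a minimal, hypothetical Python sketch of that technique. It is not how Bacula Enterprise or any other particular product implements throttling. The `TokenBucket` class, its `rate_bytes_per_s` and `burst_bytes` parameters, and the `throttled_copy` helper are assumptions made for illustration.

```python
import time
from typing import Callable, Iterable, Optional


class TokenBucket:
    """Hypothetical bandwidth limiter: sustains `rate_bytes_per_s`, with bursts up to `burst_bytes`."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float) -> None:
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes          # start with a full bucket
        self.last: Optional[float] = None  # timestamp of the previous request

    def delay_for(self, nbytes: int, now: float) -> float:
        """Reserve `nbytes` and return how many seconds to wait before sending them."""
        if self.last is not None:
            # Refill tokens for the time elapsed since the last request, capped at the burst size.
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        self.tokens -= nbytes  # may go negative: the deficit is repaid by waiting
        return 0.0 if self.tokens >= 0 else -self.tokens / self.rate


def throttled_copy(
    chunks: Iterable[bytes],
    bucket: TokenBucket,
    send: Callable[[bytes], None],
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Send each chunk, sleeping as needed so the average rate stays within the limit."""
    for chunk in chunks:
        wait = bucket.delay_for(len(chunk), clock())
        if wait > 0:
            sleep(wait)
        send(chunk)
```

In a real job, `send` would write to the network connection or storage device. The default `time.monotonic` and `time.sleep` make the limiter work in real time, and passing a fake clock and sleep function makes it easy to test. Giving each job its own bucket with a different rate is one simple way to express the priorities mentioned above.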

It is worth noting that, even if all the above requirements are met, fast backup often also depends on the following:

- Network speed. The network used for backups must meet the necessary performance requirements. This may require upgrading network devices (switches, routers, modems, etc.) and cabling to comply with the relevant standards.

- Compression of the data. Compressing the data to be backed up makes it smaller, which reduces the volume of data sent over the network. Effective, fast backup solutions avoid overloading any single element of the platform with this work. Instead, they distribute it among all of the platform's components (main server, agents and storage).

- Deduplication. Many backup solutions today use deduplication, which avoids writing data that already exists in the backup volumes, using previous backups as a reference. It can be implemented in software or hardware.

- Backup destination. Consider whether backups will go to local storage devices or to remote sites. Remote sites bring additional considerations, discussed below. For every destination, the medium through which the data are transported is critical.

- Transfer mechanisms. If backup volumes are stored at remote destinations, various mechanisms and tools can be used to copy them. Examples include FTP, rsync, proprietary methods, and object copies, each with its own characteristics and average transfer speed.

- Backup to the cloud. Cloud backup can be considered a form of backup to a remote site when the machines being backed up are not in the same cloud network (VPC). It is listed separately because it requires a complete structure to manage the platform. That structure includes service levels and schemes that users can adapt, and these affect both execution times and the protection of the information.

- Interconnection mechanisms. High-speed links enable fast data transfer between the servers or computers holding the data and the systems or devices where backups are stored, such as tape libraries. Efficient, fast backup software makes use of these links as far as the hardware and regulations allow. Examples include SAN and NAS networks, fiber networks, and NDMP (Network Data Management Protocol).
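The compression and deduplication items in the list above can be illustrated together. The following is a minimal Python sketch, assuming fixed-size chunks identified by SHA-256 digests and compressed with `zlib`. The `ChunkStore` class and the `CHUNK_SIZE` value are hypothetical. Real backup products typically use more sophisticated schemes, such as variable-size (content-defined) chunking.

```python
import hashlib
import zlib
from typing import Dict, List

CHUNK_SIZE = 4096  # hypothetical fixed chunk size in bytes


class ChunkStore:
    """Toy deduplicating backup store: each unique chunk is compressed and kept once."""

    def __init__(self) -> None:
        self.chunks: Dict[str, bytes] = {}  # SHA-256 hex digest -> compressed chunk

    def backup(self, data: bytes) -> List[str]:
        """Store `data` and return its recipe: the ordered list of chunk digests."""
        recipe = []
        for offset in range(0, len(data), CHUNK_SIZE):
            chunk = data[offset:offset + CHUNK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            if digest not in self.chunks:
                # New data: compress it before writing it to the store.
                self.chunks[digest] = zlib.compress(chunk)
            # Chunks already seen (in this or a previous backup) are only referenced.
            recipe.append(digest)
        return recipe

    def restore(self, recipe: List[str]) -> bytes:
        """Rebuild the original data from a recipe."""
        return b"".join(zlib.decompress(self.chunks[d]) for d in recipe)

    def stored_bytes(self) -> int:
        """Total size of what is actually kept in the store."""
        return sum(len(c) for c in self.chunks.values())
```

Backing up the same data a second time adds no new chunks, only a new recipe. This reflects the deduplication behavior described above: data already present in earlier backups is not written again, and what is written is compressed first.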

In short, effective and fast backup software must not only run backups at high speed but also adapt quickly to changing requirements. These include the objects to back up, scheduling adjustments, manual execution of backups and restores, configuration of new jobs, and changes to or resizing of backup hardware, among others.

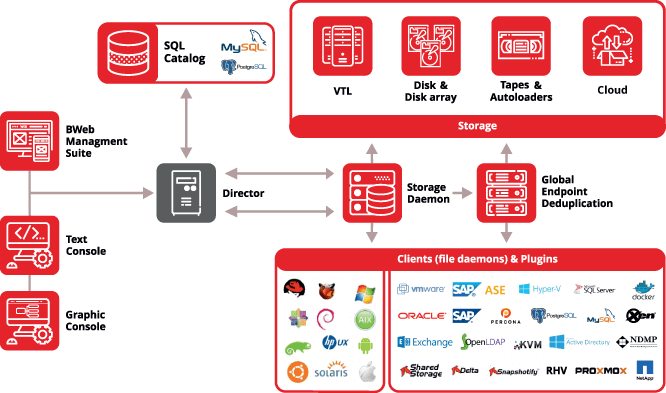

The backup application must be comprehensive, offering all the functionality needed to configure, manage, execute, monitor and send notifications about an organization’s backup activities. Bacula Enterprise is especially well equipped to provide exactly these capabilities. Most importantly, it offers exceptional adaptability to the needs of companies.