Save disk space and money with Deduplication Volumes, a unique feature from Bacula Systems.

- No other backup software stores data this way (patent-pending technology)

- Bacula Systems helps you overcome your scaling challenges

- Raising the record size limit can significantly reduce your storage costs

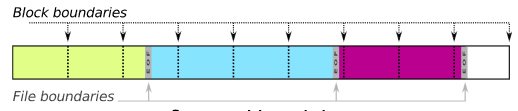

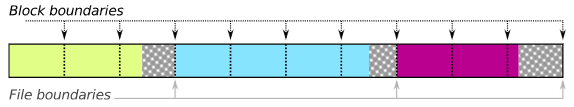

Deduplication refers to any of a number of methods for reducing the storage requirements of a dataset by eliminating redundant pieces without rendering the data unusable. Unlike compression, deduplication assigns each distinct piece of data a unique identifier that is used to reference it within the dataset, and a virtually unlimited number of references can point to the same piece of data.
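
To make the idea of identifiers and references concrete, here is a minimal sketch of generic chunk-level deduplication. It is not how Deduplication Volumes are implemented. The fixed chunk size, the use of SHA-256 as the identifier, and the function names `deduplicate` and `restore` are all assumptions chosen for illustration.

```python
import hashlib


def deduplicate(data: bytes, chunk_size: int = 4):
    """Split data into fixed-size chunks and store each distinct chunk once.

    Illustrative only: real systems use much larger (often variable-size)
    chunks; chunk_size=4 just keeps the example easy to read.
    """
    store = {}  # identifier -> chunk bytes (one entry per distinct chunk)
    refs = []   # ordered identifiers; replaying them rebuilds the data
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        # Assumption: a SHA-256 digest serves as the chunk's unique identifier.
        ident = hashlib.sha256(chunk).hexdigest()
        store.setdefault(ident, chunk)  # keep only the first copy
        refs.append(ident)              # any number of references may repeat
    return store, refs


def restore(store, refs):
    """Rebuild the original data by following the references in order."""
    return b"".join(store[ident] for ident in refs)


data = b"ABCDABCDABCDWXYZ"
store, refs = deduplicate(data)
print(len(refs), "references,", len(store), "stored chunks")
```

In this example the chunk `ABCD` appears three times in the input but is stored once and referenced three times, and `restore(store, refs)` returns the original bytes unchanged.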